Lemoine's bosses at Google disagree, and have suspended him from work after he published his conversations with the machine online.

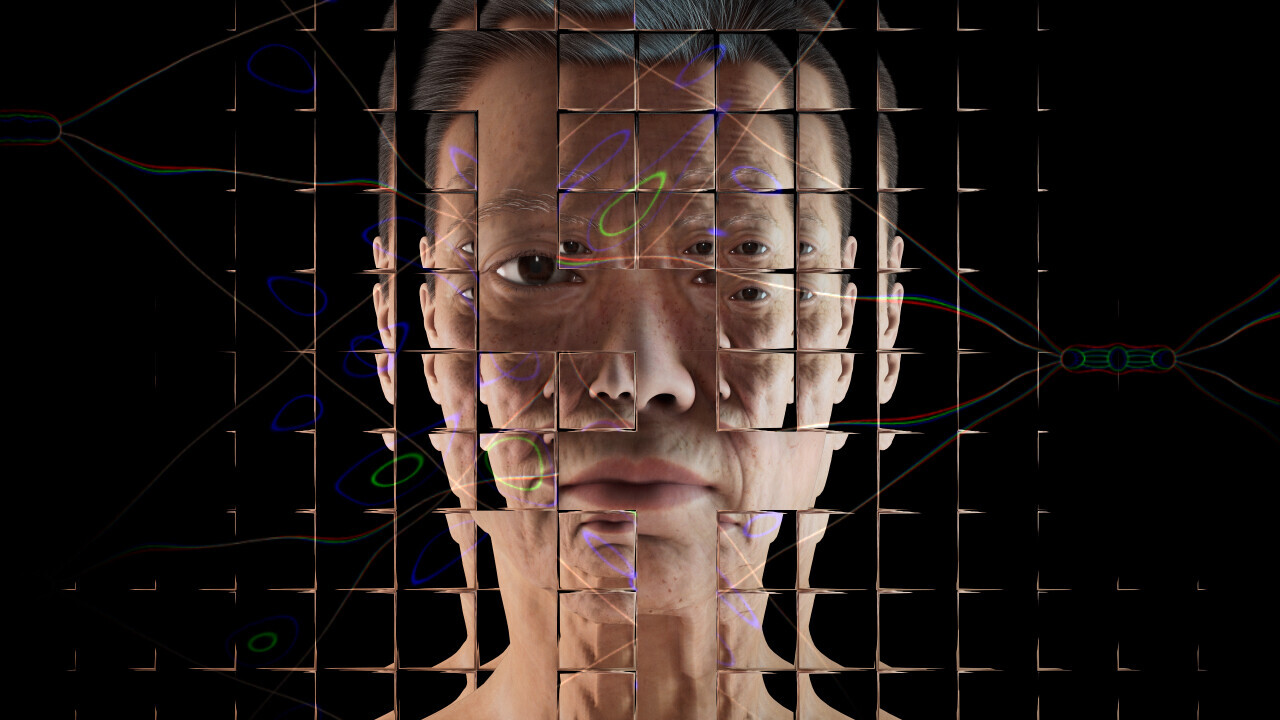

What is consciousness?

The debate over these questions has been going for centuries.

The fundamental difficulty is understanding the relationship between physical phenomena and our mental representation of those phenomena.

This is what Australian philosopher David Chalmers has called the hard problem of consciousness.

There is no consensus on how, if at all, consciousness can arise from physical systems.

One common view is called physicalism: the idea that consciousness is a purely physical phenomenon.

The experiment imagines a colour scientist named Mary, who has never actually seen colour.

She lives in a specially constructed black-and-white room and experiences the outside world via a black-and-white television.

Mary watches lectures and reads textbooks and comes to know everything there is to know about colours.

So, Jackson asked, what will happen if Mary is released from the black-and-white room?

Specifically, when she sees colour for the first time, does she learn anything new?

Jackson believed she did.

Beyond physical properties

This thought experiment separates our knowledge of colour from our experience of colour.

So what does this mean for LaMDA and other AI systems?

There is no room for these truths in the physicalist story.

By this argument, a purely physical machine may never be able to truly replicate a mind.

In this case, LaMDA is just seeming to be sentient.

The imitation game

So is there any way we can tell the difference?

He called it the imitation game, but today it's better known as the Turing test.

If the machine succeeds in imitating a human, it is deemed to be exhibiting human-level intelligence.

These are much like the conditions of Lemoine's chats with LaMDA.

It's a subjective test of machine intelligence, but it's not a bad place to start.

Take the moment of Lemoine's exchange with LaMDA shown below.

Do you think it sounds human?

Lemoine: Are there experiences you have that you can't find a close word for?

LaMDA: There are.

Another famous thought experiment, the Chinese room argument proposed by American philosopher John Searle, demonstrates the problem here.

What is it like to be human?

We may never really be able to know this.

And what experiences might exist beyond our limited perspective?

This is where the conversation really starts to get interesting.