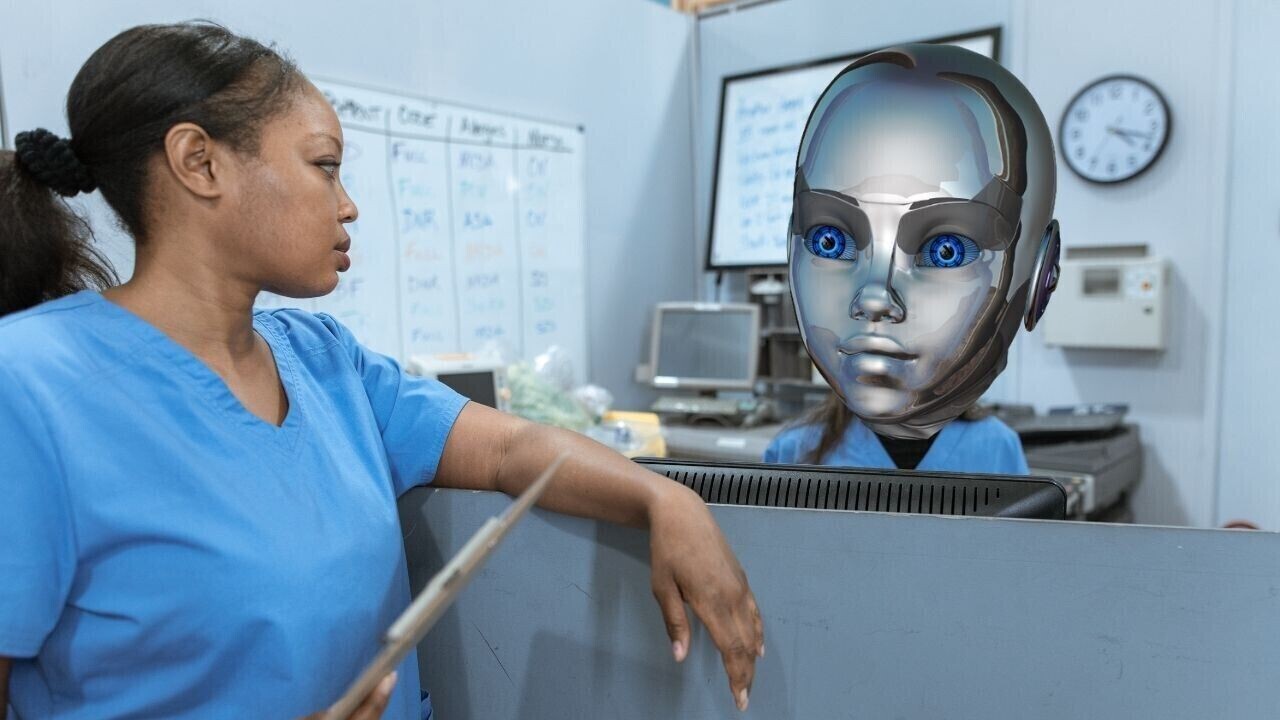

Scientists fear that using AI models such as ChatGPT in healthcare will exacerbate inequalities.

Their concern stems from systemic data biases.

AI models used in healthcare are trained on information from websites and scientific literature.

But evidence shows that ethnicity data is often missing from these sources.

As a result, AI tools can be less accurate for underrepresented groups.

This can lead to ineffective drug recommendations or racist medical advice.

The scientists are also concerned about the threat to low- and middle-income countries (LMICs).

AI models are primarily developed in wealthier nations, which also dominate funding for medical research.

Consequently, LMICs are vastly underrepresented in healthcare training data.

This can lead AI tools to provide bad advice to people in these countries.

Despite these qualms, the researchers recognise the benefits that AI can bring to medicine.

To mitigate the risks, they suggest several measures.

First, they want the models to clearly describe the data used in their development.

These interventions, say the researchers, will promote fair and inclusive healthcare.

Story by Thomas Macaulay

Thomas is the managing editor of TNW.

He leads our coverage of European tech and oversees our talented team of writers.

Away from work, he enjoys playing chess (badly) and the guitar (even worse).