How are people likely to navigate this relatively uncharted territory?


Text generated by models like Google’s LaMDA can be hard to distinguish from text written by humans.

This impressive achievement is the result of a decades-long effort to build models that generate grammatical, meaningful language.

Modern models differ from earlier attempts in several important ways. First, they are trained on essentially the entire internet.

Second, they can learn relationships between words that are far apart, not just words that are neighbors.

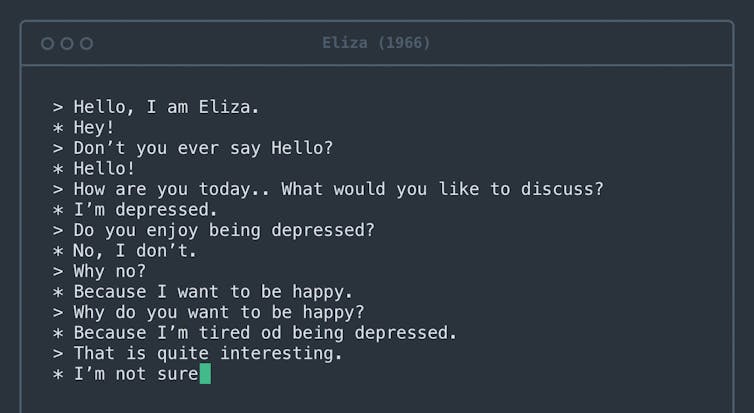

The first computer system to engage people in dialogue was psychotherapy software called Eliza, built more than half a century ago.

Today, language models are so good at generating text that almost all the sentences they produce seem fluent and grammatical.

Peanut butter and pineapples?

We asked a large language model, GPT-3, to complete the sentence “Peanut butter and pineapples___”.

It said: “Peanut butter and pineapples are a great combination. The sweet and savory flavors of peanut butter and pineapple complement each other perfectly.”

But how did GPT-3 come up with this paragraph?

By generating a word that fit the context we provided.

And then another one.

And then another one.
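To make this word-by-word loop concrete, here is a toy sketch in Python. It is not how GPT-3 works internally: GPT-3 is a neural network that predicts pieces of words using far richer context, while this sketch swaps in a simple bigram counter (closer in spirit to early n-gram models) trained on a made-up, three-sentence corpus. The names `CORPUS`, `train_bigrams` and `generate` are hypothetical. What it shares with large models is the loop itself: pick a next word that fits the context, append it, and repeat.

```python
from collections import Counter, defaultdict

# A tiny, made-up corpus (hypothetical; real models train on vastly more text).
CORPUS = (
    "peanut butter and jelly is a classic . "
    "peanut butter and jelly is sweet . "
    "peanut butter and banana is a classic ."
)


def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_words=10):
    """Extend the prompt one word at a time, always choosing the most
    frequent follower of the last word (greedy decoding)."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in the corpus
        next_word = followers.most_common(1)[0][0]
        words.append(next_word)
        if next_word == ".":
            break  # stop at the end of a sentence
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "peanut butter and"))
# prints: peanut butter and jelly is a classic .
```

Greedy decoding always picks the single most frequent next word; real systems typically sample from a probability distribution instead, which is one reason the same prompt can yield different continuations. Note also that the toy model has no notion of taste at all: it only knows which words tend to follow which.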

Large AI language models can engage in fluent conversation.

The human brain is hardwired to infer intentions behind words.

Every time you engage in conversation, your mind automatically constructs a mental model of your conversation partner.

However, in the case of AI systems, this mechanism misfires, building a mental model out of thin air.

A little more probing can reveal the severity of this misfire.

Consider the following prompt: “Peanut butter and feathers taste great together because___”.

GPT-3 continued: “Peanut butter and feathers taste great together because they both have a nutty flavor. Peanut butter is also smooth and creamy, which helps to offset the feathers’ texture.”

One begins to suspect that GPT-3 has never actually tried peanut butter and feathers.

Fluent language alone does not imply humanity

Will AI ever become sentient?

This question requires deep consideration, and indeed philosophers have pondered it for decades.

Words can be misleading, and it is all too easy to mistake fluent speech for fluent thought.