Last week, OpenAI removed the waitlist for the application programming interface to GPT-3, its flagship language model.

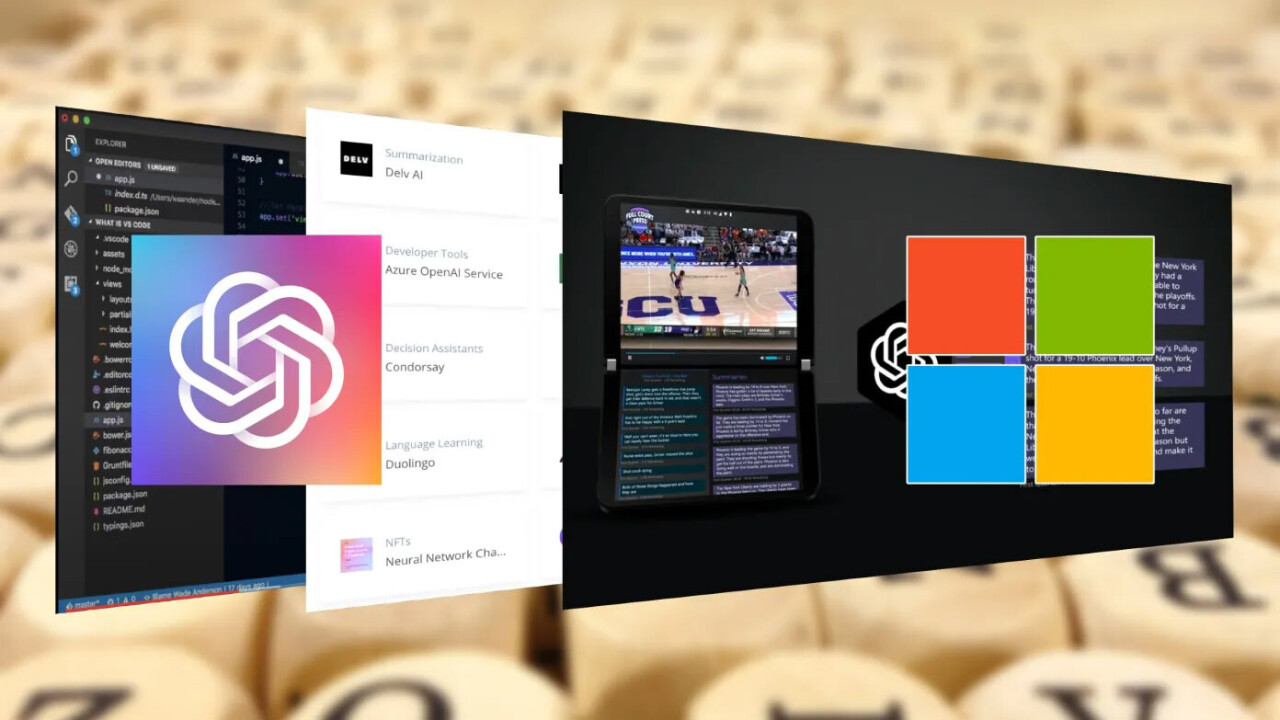

Since the beta release of GPT-3, developers have built hundreds of applications on top of the language model.

But building successful GPT-3 products presents unique challenges.

Here's what the people who have been developing applications with GPT-3 have to say about best practices.

Models and tokens

OpenAI offers four versions of GPT-3: Ada, Babbage, Curie, and Davinci.

Ada is the fastest, least expensive, and lowest-performing model.

Davinci is the slowest, most expensive, and highest-performing model.

Babbage and Curie fall between the two extremes.

Adding layers and parameters improves the model's learning capacity but also increases the processing time and costs.

OpenAI calculates the pricing of its models based on tokens.

According to OpenAI, one token generally corresponds to ~4 characters of text for common English text.

This translates to roughly 3/4 of a word (so 100 tokens ~= 75 words).
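The two rules of thumb above can be turned into a quick estimator. This is a minimal sketch that assumes the approximate ratios quoted from OpenAI (about 4 characters or 3/4 of a word per token); the function names are made up for illustration, and the real tokenizer will give different counts for uncommon words, code, or non-English text.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb: ~4 characters per token
WORDS_PER_TOKEN = 0.75   # rule of thumb: ~3/4 of a word per token


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text: str) -> int:
    """Rough token estimate based on whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)
```

Both functions are estimates only; for billing-grade numbers, count tokens with the tokenizer the model actually uses.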

), you'll get better token-to-word ratios.

In the example below, aside from GPT-3, every other word counts as one token.

One of the benefits of GPT-3 is its few-shot learning capabilities.

These examples will work like real-time training and improve GPT-3's results without the need to readjust its parameters.

Therefore, long prompts with few-shot learning examples will increase the cost of using GPT-3.
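To see how examples inflate a prompt, here is a small sketch that builds a few-shot prompt and estimates its cost. The `Input:`/`Output:` layout, the helper names, and the `price_per_1k_tokens` parameter are assumptions for illustration; the token count uses the rough 4-characters-per-token rule rather than the real tokenizer, and no actual OpenAI prices are implied.

```python
import math


def build_few_shot_prompt(examples, query):
    """Join (input, output) example pairs and the new query into one prompt."""
    blocks = [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


def estimate_tokens(text):
    """Rough estimate: about 4 characters per token for common English text."""
    return math.ceil(len(text) / 4)


def estimate_prompt_cost(prompt, price_per_1k_tokens):
    """Estimated cost of sending the prompt, given a price per 1,000 tokens."""
    return estimate_tokens(prompt) / 1000 * price_per_1k_tokens
```

Comparing `estimate_tokens(build_few_shot_prompt([], query))` with the same call on a prompt that carries several examples gives a quick sense of how much each request grows.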

Which model should you use?

"If it's something simple, like binary classification, I might start with Ada or Babbage."

When unsure of complexity, Shumer starts by trying the biggest model, Davinci.

Then, he works his way down toward the smaller models.

"When I get it working with Davinci, I venture to modify the prompt to use Curie.

"This typically means adding more examples, refining the structure, or both.

"If it works on Curie, I move to Babbage, then Ada," he said.
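The step-down approach described here can be sketched as a simple loop. In this illustration, `passes` is a hypothetical callable that you would supply: it should run your own quality check for a given model (including any prompt changes that model needs) and return `True` or `False`. The model names are used only as labels.

```python
MODELS_LARGEST_FIRST = ["davinci", "curie", "babbage", "ada"]


def smallest_working_model(passes, models=MODELS_LARGEST_FIRST):
    """Walk from the largest model down and return the smallest one that
    still passes the caller's quality check, or None if even the largest fails."""
    chosen = None
    for model in models:
        if not passes(model):
            break          # stop at the first model that is not good enough
        chosen = model     # this model works; try the next smaller one
    return chosen
```

The loop stops at the first failure, which mirrors the idea of moving to a smaller model only after the current one works.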

For some applications, he uses a multi-step system that includes a mix of different models.

Paul Bellow, author and developer of LitRPG Adventures, used Davinci for his GPT-3-powered RPG content generator.

"I wanted to generate the highest quality output possible for later fine-tuning," Bellow told TechTalks.

"I've spent a premium, but I now have over 10,000 generations that I can use for future fine-tuning."

(More on fine-tuning later.)

"A lot of the time, a well-thought-out prompt can get good content out of the Curie model.

"It all just depends on the use case," Bellow said.

"You have to build a business model around your product that supports the engines you're using," Shumer said.

It all depends on your product goals.

Paid users enjoy the power of large GPT-3 models, while free-tier users get access to the smaller models.
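A tiered setup like this can be as simple as a lookup table. The sketch below is an assumption for illustration: the tier names and the mapping from tier to model are hypothetical, and the right choice depends on your own pricing and margins.

```python
# Hypothetical tier-to-model mapping; adjust to your own pricing and margins.
MODEL_BY_TIER = {"free": "ada", "paid": "davinci"}


def model_for_user(tier: str) -> str:
    """Return the model for a subscription tier, falling back to the cheapest one."""
    return MODEL_BY_TIER.get(tier, "ada")
```

Falling back to the smallest model for unknown tiers keeps an unexpected tier value from quietly running up costs.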

For LitRPG Adventures, quality is prime, which is why Bellow initially stuck to the Davinci model.

"This will bring my costs per generation down quite a bit while hopefully maintaining high quality."

He has been able to develop a business model that maintains a profit margin while using Davinci.

Fine-tuning GPT-3

OpenAI's scientists initially introduced GPT-3 as a task-agnostic language model.

According to their initial tests, GPT-3 rivaled state-of-the-art models on specific tasks without the need for further training.

But they also mentioned fine-tuning as a promising direction of future work.

As with other deep learning architectures, fine-tuning has several benefits for GPT-3.

The OpenAI API allows customers to create fine-tuned versions of GPT-3 for a premium.

OpenAI will host your model and make it available to you through its API.

Fine-tuning will enable you to tackle problems that are impossible to solve with the basic models.

The vanilla models are highly capable and are usable for many tasks.

If done properly, fine-tuning can also reduce the costs of using GPT-3.

Fine-tuned models also reduce the size of prompts, which further slashes your token usage.

But fine-tuning isn't without challenges.

Without a quality training dataset, fine-tuning can have adverse effects.

Clean your dataset as much as you can.

If you manage to gather a sizeable dataset of quality examples, however, fine-tuning can do wonders.
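As a starting point for cleaning, the sketch below trims whitespace, drops empty examples, removes exact duplicates, and writes the result as JSON Lines. It assumes the prompt/completion JSONL layout that OpenAI's fine-tuning documentation described for GPT-3; the helper names are hypothetical, and real cleaning usually also needs manual review for quality.

```python
import json


def clean_examples(pairs):
    """Strip whitespace, drop empty examples, and remove exact duplicates
    while keeping the original order."""
    seen = set()
    cleaned = []
    for prompt, completion in pairs:
        prompt, completion = prompt.strip(), completion.strip()
        if not prompt or not completion:
            continue  # skip examples with a missing side
        key = (prompt, completion)
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        cleaned.append(key)
    return cleaned


def to_jsonl(pairs):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    return "\n".join(
        json.dumps({"prompt": p, "completion": c}) for p, c in pairs
    )
```

Running `to_jsonl(clean_examples(raw_pairs))` and saving the result to a file gives a dataset you can then inspect by hand before uploading.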

Larger models require less data for fine-tuning.

A Curie fine-tuned on 100 examples may have similar results to a Babbage fine-tuned on 2,000 examples.

The larger models can do remarkable things with very little data.

GPT-3 alternatives

OpenAI received a lot of criticism for deciding not to release GPT-3 as an open-source model.

Subsequently, other developers released GPT-3 alternatives and made them available to the public.

One very popular project is GPT-J by EleutherAI.

GPT-J isn't the same as full-scale GPT-3, but it is useful if you know how to work with it.

We have successfully used models like these at Otherside.

"In my experience, they operate at about the level of the Curie model from OpenAI," Bellow said.

"I do think (hope) that there will be even more options soon from other players.

"Competition between suppliers is good for consumers like me."

You can read the original article here.