An investigative journalist receives a video from an anonymous whistleblower.

It shows a candidate for president admitting to illegal activity.

But is this video real?

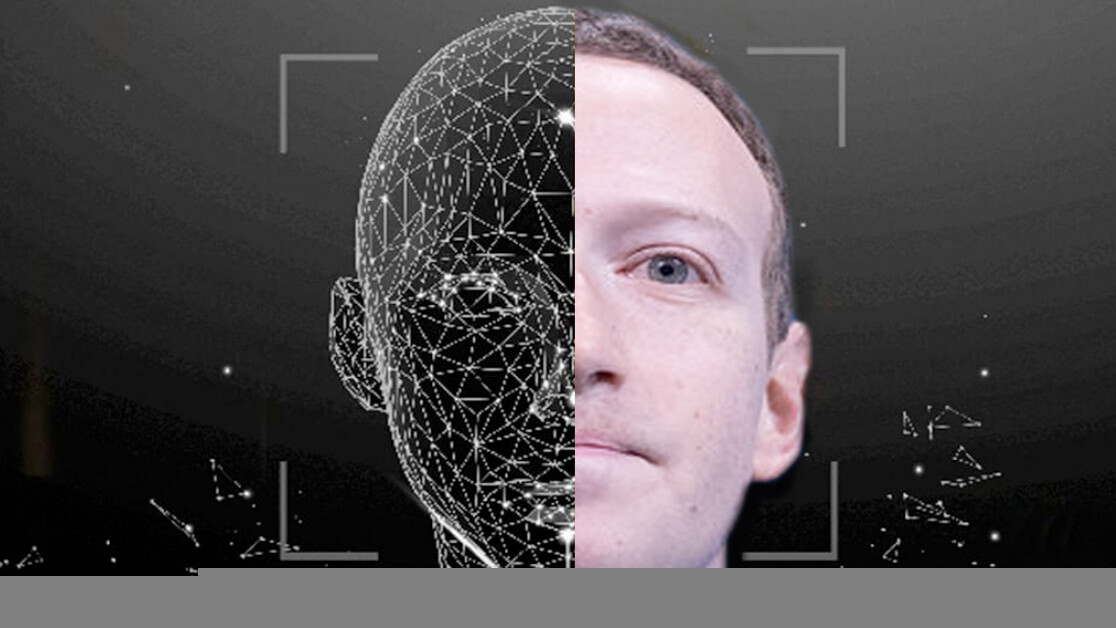

In fact, it's a deepfake, a video made using artificial intelligence with deep learning.

Journalists all over the world could soon be using a tool that detects videos like this.

As researchers who have been studying deepfake detection and developing a tool for journalists, we see a future for these tools.

The problem with deepfakes

Most people know that you can't believe everything you see.

Videos, though, are another story.

Hollywood directors can spend millions of dollars on special effects to make up a realistic scene.

Unfortunately, deepfake technology also makes it possible to create pornography without the consent of the people depicted.

So far, those people, nearly all women, are the biggest victims when deepfake technology is misused.

Deepfakes can also be used to create videos of political leaders saying things they never said.

Given these risks, it would be extremely valuable to be able to detect deepfakes and label them clearly.

Spotting fakes

Deepfake detection as a field of research began a little over three years ago.

Early work focused on detecting visible problems in the videos, such as deepfakes that didn't blink.

There are two major categories of deepfake detection research.

The first involves looking at the behavior of people in the videos.

Suppose you have a lot of video of someone famous, such as President Obama.

A detection system can learn his behavioral patterns from that footage, then watch a deepfake of him and notice where it does not match those patterns.

This approach has the advantage of possibly working even if the video quality itself is essentially perfect.
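To make the idea concrete, here is a minimal sketch of behavior-based checking. It is an illustration only, not any research team's actual method: the two features (gestures per minute and average pause length), the sample numbers, and the function names `learn_profile` and `mismatch_score` are all assumptions made up for this example. Real systems learn far richer patterns from video.

```python
from statistics import mean, stdev

def learn_profile(authentic_clips):
    """Compute the mean and standard deviation of each feature across authentic clips."""
    columns = list(zip(*authentic_clips))
    return [(mean(col), stdev(col)) for col in columns]

def mismatch_score(profile, clip):
    """Average absolute z-score of a clip's features; higher means less like the person."""
    z_scores = [
        abs(value - mu) / max(sigma, 1e-9)  # guard against zero spread
        for value, (mu, sigma) in zip(clip, profile)
    ]
    return mean(z_scores)

# Hypothetical features per clip: [gestures per minute, mean pause length in seconds]
authentic = [[12.0, 0.8], [11.0, 0.9], [13.0, 0.7], [12.5, 0.85]]
profile = learn_profile(authentic)

typical_clip = [12.2, 0.82]  # close to the person's usual behavior
suspect_clip = [4.0, 0.2]    # far from the person's usual behavior

print(mismatch_score(profile, typical_clip))
print(mismatch_score(profile, suspect_clip))
```

The suspect clip scores much higher than the typical one because its behavior falls far outside the range seen in authentic footage, regardless of how clean the image looks.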

Other researchers, including our team, have been focused on differences that all deepfakes have compared to real videos.

Deepfake videos are often created by merging individually generated frames to form videos.

This allows us to detect inconsistencies in the flow of the information from one frame to another.

We use a similar approach for our fake audio detection system as well.

These subtle details are hard for people to see, but they show that deepfakes are not quite perfect yet.

Detectors like these can work for any person, not just a few world leaders.
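The frame-to-frame idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not our detection system: it assumes each frame has already been reduced to a short list of numbers (hypothetical features), and the names `frame_jumps` and `flag_inconsistent`, along with the threshold `factor`, are invented for the example. Real detectors learn such features with neural networks.

```python
from statistics import median

def frame_jumps(frames):
    """Euclidean distance between each pair of consecutive frame feature vectors."""
    return [
        sum((a - b) ** 2 for a, b in zip(prev, cur)) ** 0.5
        for prev, cur in zip(frames, frames[1:])
    ]

def flag_inconsistent(frames, factor=5.0):
    """Return indices of frames whose jump from the previous frame far exceeds the median jump."""
    jumps = frame_jumps(frames)
    typical = max(median(jumps), 1e-9)  # guard against a perfectly static clip
    return [i + 1 for i, jump in enumerate(jumps) if jump > factor * typical]

# Hypothetical per-frame features that drift smoothly over time
smooth = [[0.10 * t, 1.0 - 0.05 * t] for t in range(8)]

# Same clip with one frame that does not fit the surrounding motion
glitchy = [row[:] for row in smooth]
glitchy[4] = [2.0, -1.0]

print(flag_inconsistent(smooth))   # no frames flagged
print(flag_inconsistent(glitchy))  # flags the jump into and out of the odd frame
```

Because the check compares each frame only with its neighbors, it does not need to know who is in the video.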

In the end, it may be that both types of deepfake detectors will be needed.

Recent detection systems perform very well on videos specifically gathered for evaluating the tools.

Unfortunately, even the best models do poorly on videos found online.

Improving these tools to be more robust and useful is the key next step.

Who should use deepfake detectors?

Ideally, a deepfake verification tool should be available to everyone.

However, this technology is in the early stages of development.

Researchers need to improve the tools and protect them against hackers before releasing them broadly.

Sitting on the sidelines is not an option.

Before publishing stories, journalists need to verify the information.

Can the detectors win the arms race?

It is encouraging to see teams from Facebook and Microsoft investing in technology to understand and detect deepfakes.

This field needs more research to keep up with the speed of advances in deepfake technology.

Research has shown that people remember the lie, but not the fact that it was a lie.

Will the same be true for fake videos?

Simply putting "Deepfake" in the title might not be enough to counter some kinds of disinformation.

Deepfakes are here to stay.

Managing disinformation and protecting the public will be more challenging than ever as artificial intelligence gets more powerful.