Given a good enough architecture, the larger the model, the more learning capacity it has.

Thus, these new models have huge learning capacity and are trained on very, very large datasets.

Because of that, they can learn a close approximation of the distribution of the data they are trained on.

One can say that they encode compressed knowledge of these datasets.

This allows these models to be used for very interesting applications, the most common one being transfer learning.


Although BERT started the NLP transfer learning revolution, we will explore GPT-2 and T5 models.

GPT-2 has a lot of potential use cases.

It can be used to predict the probability of a sentence.

This, in turn, can be used for text autocorrection.
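A language model such as GPT-2 scores a sentence with the chain rule: the probability of the whole sentence is the product of each token's probability given the tokens before it, usually summed as log-probabilities to avoid numerical underflow. The minimal sketch below assumes a hand-written toy bigram table (hypothetical numbers) in place of GPT-2's next-token distribution, so each token is conditioned only on the previous one; with the real model, these conditionals would come from its output over the full prefix. Autocorrection then reduces to keeping the candidate sentence with the highest score.

```python
import math

# Hypothetical toy bigram probabilities P(next | previous), standing in for
# GPT-2's next-token distribution. "<s>" marks the start of a sentence.
BIGRAM_PROBS = {
    ("<s>", "i"): 0.5,
    ("i", "ate"): 0.3,
    ("i", "eight"): 0.01,
    ("ate", "dinner"): 0.4,
    ("eight", "dinner"): 0.01,
}
FLOOR = 1e-6  # small probability for pairs the toy table has never seen


def sentence_log_prob(sentence: str) -> float:
    """Chain rule in log space: sum of log P(token | previous token)."""
    tokens = ["<s>"] + sentence.lower().split()
    return sum(
        math.log(BIGRAM_PROBS.get((prev, cur), FLOOR))
        for prev, cur in zip(tokens, tokens[1:])
    )


def autocorrect(candidates: list[str]) -> str:
    """Pick the candidate sentence the model considers most probable."""
    return max(candidates, key=sentence_log_prob)


if __name__ == "__main__":
    options = ["I eight dinner", "I ate dinner"]
    for s in options:
        print(f"{s!r}: log P = {sentence_log_prob(s):.2f}")
    print("Correction:", autocorrect(options))  # prints "Correction: I ate dinner"
```

The homophone error in "I eight dinner" is corrected because the toy model assigns "I ate dinner" a much higher log-probability.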

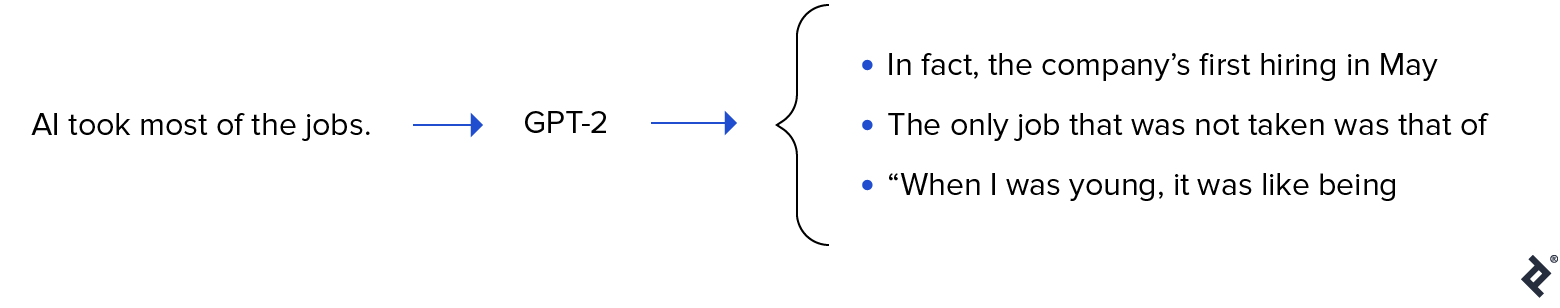

Text generation

The ability of a pre-trained model like GPT-2 to generate coherent text is very impressive.

Also notice the reference to Batman v Superman.

Let's see another example.

GPT-2 can generate any kind of text like this.
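Text generation with GPT-2 is autoregressive: the model predicts a distribution over the next token, one token is chosen and appended, and the loop repeats on the longer prefix. The sketch below shows that loop with greedy decoding over a hypothetical toy probability table that stands in for the model (and, for brevity, conditions only on the last token). Demos usually sample from the distribution, for example with top-k sampling, rather than always taking the most likely token, which makes the output more varied.

```python
# Hypothetical toy next-token distributions standing in for GPT-2's output.
# The real model conditions on the whole prefix; this toy looks only at the
# last token to keep the example short.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<eos>": 0.2},
    "a": {"dog": 0.7, "<eos>": 0.3},
    "cat": {"sat": 0.8, "<eos>": 0.2},
    "dog": {"barked": 0.9, "<eos>": 0.1},
    "sat": {"<eos>": 1.0},
    "barked": {"<eos>": 1.0},
}


def greedy_generate(prompt: tuple[str, ...] = (), max_new_tokens: int = 10) -> list[str]:
    """Autoregressive decoding: repeatedly append the most likely next token."""
    tokens = ["<s>", *prompt]
    for _ in range(max_new_tokens):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]   # P(next | last token)
        next_token = max(dist, key=dist.get)  # greedy choice
        if next_token == "<eos>":             # the model chose to stop
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker, keep prompt + continuation


if __name__ == "__main__":
    print(" ".join(greedy_generate()))        # prints "the cat sat"
    print(" ".join(greedy_generate(("a",))))  # prints "a dog barked"
```

Passing a prompt works like typing into a live demo: generation simply continues from the tokens already present.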

A Google Colab notebook is ready to be used for experiments, as is the Write With Transformer live demo.

But if we force the model to answer our question, it may output a pretty vague answer.

For the second question, it tried its best, but its answer does not compare with Google Search.
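One way to push a plain language model toward answering, offered here as an assumption rather than as the article's method, is to wrap the question in a Q/A-style prompt and keep only the first line the model writes after "A:". The helpers below are hypothetical and model-agnostic: `generated` stands for whatever text the model returns, and because many generation APIs echo the prompt back, it is stripped first when present.

```python
def qa_prompt(question: str) -> str:
    """Wrap a question in an assumed 'Q: ... A:' prompt layout (hypothetical helper)."""
    return f"Q: {question.strip()}\nA:"


def extract_answer(prompt: str, generated: str) -> str:
    """Return the first line the model wrote after the prompt.

    If the prompt is echoed back, it is removed first; anything after the
    first newline (e.g. a follow-up "Q:" the model invents) is discarded.
    """
    continuation = generated[len(prompt):] if generated.startswith(prompt) else generated
    return continuation.strip().split("\n", 1)[0].strip()


if __name__ == "__main__":
    prompt = qa_prompt("What is the capital of France?")
    # Stand-in for text a model might return; no real model is called here.
    fake_output = prompt + " Paris.\nQ: What is the capital of Spain?"
    print(extract_answer(prompt, fake_output))  # prints "Paris."
```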

It's clear that GPT-2 has huge potential.

Once fine-tuned, it can be used for the above-mentioned examples with much higher accuracy.

But even the pre-trained GPT-2 we are evaluating is still not that bad.

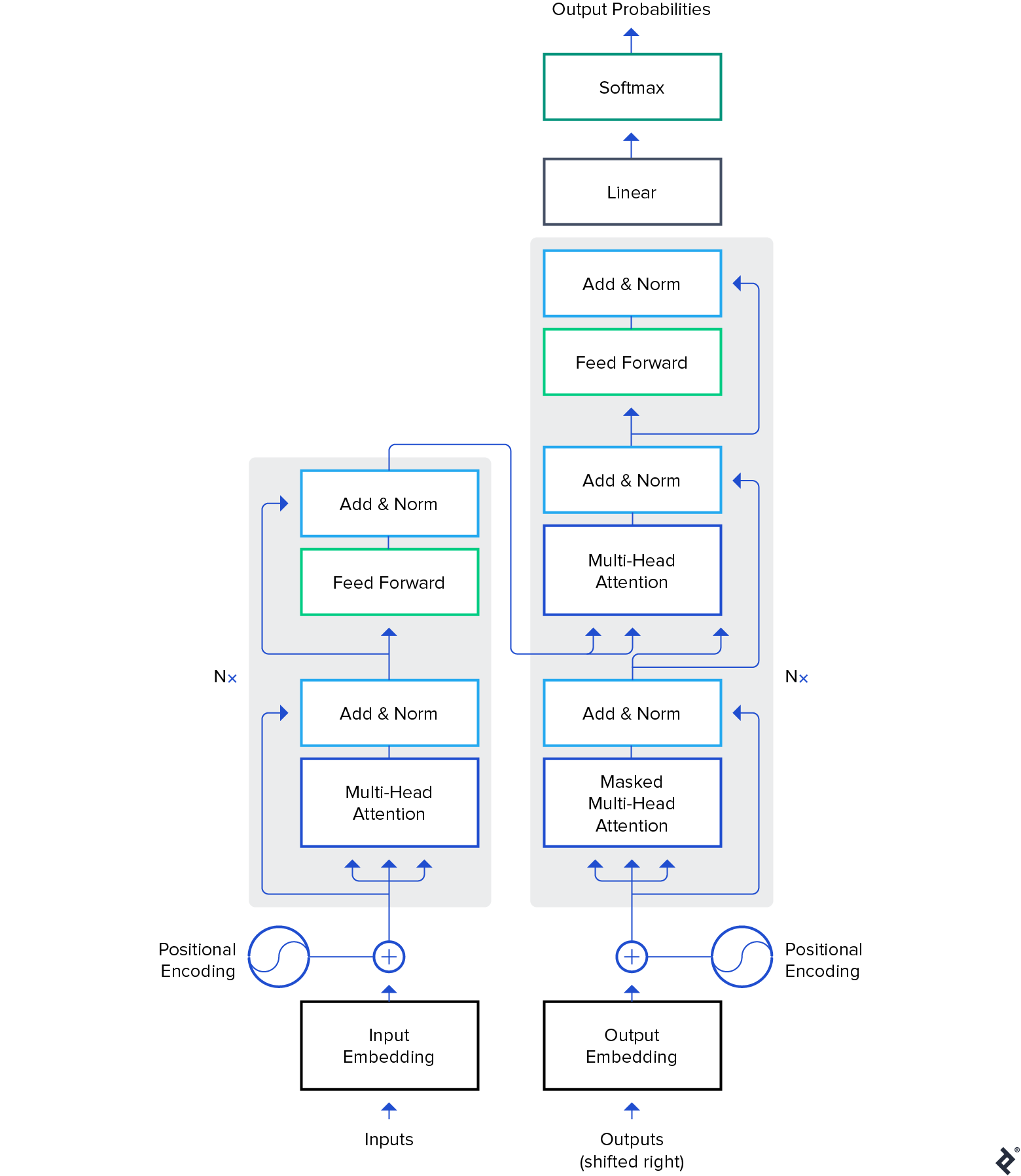

T5 builds on top of previous work on Transformer models in general.

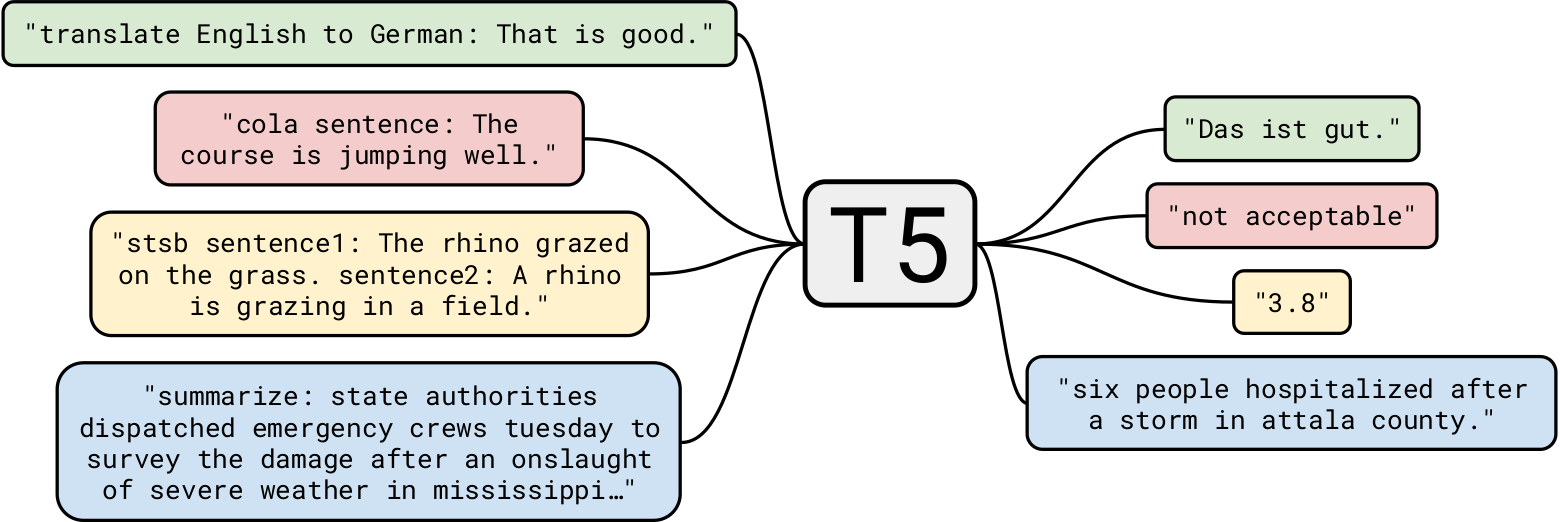

Although T5 can do text generation like GPT-2, we will use it for more interesting business use cases.
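T5 frames every task as text-to-text: the task is selected by a plain-text prefix on the input, such as "summarize: " for summarization, and the T5 paper's preprocessing formats SQuAD-style question answering as "question: {question} context: {context}". The minimal sketch below only builds those input strings (the function names are hypothetical); each string would then be tokenized and passed to a T5 checkpoint's `generate` method, for example through Hugging Face Transformers' `T5ForConditionalGeneration`, which is not shown here.

```python
def t5_summarize_input(text: str) -> str:
    """Prefix text with T5's 'summarize: ' task marker.

    Whitespace (including newlines from a scraped article) is collapsed to
    single spaces first.
    """
    return "summarize: " + " ".join(text.split())


def t5_qa_input(question: str, context: str) -> str:
    """Build a SQuAD-style T5 input: 'question: <q> context: <c>'."""
    return f"question: {question.strip()} context: {' '.join(context.split())}"


if __name__ == "__main__":
    article = "The new phone ships next month.\nIt has a larger battery."
    print(t5_summarize_input(article))
    print(t5_qa_input("When does the phone ship?", article))
```

Because the task lives in the input text, the same pre-trained model can switch between summarization and question answering without any code changes on the model side.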

Summarization

Let's start with a simple task: text summarization.

Also, each summary is different from the others.

Summarization with pre-trained models has many potential applications.

It could be taken further by personalizing the summary for each user.

This is a very simple example, yet it demonstrates the power of this model.

Another interesting use case could be using such summaries for a website's SEO.

This model has very interesting use cases, which we will see later.

How about a very small context?

Okay, that was pretty easy.

How about a philosophical question?

Let us take it further.

Let's ask a few questions using the previously mentioned Engadget article as the context.

As you can see, T5's contextual question answering is very good.

We can also ask questions using a legal document as context.
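A legal document can easily exceed the input length T5 was pre-trained with (512 tokens in the original setup). One possible workaround, an assumption on our part and not part of the article's experiments, is to split the context into overlapping windows and ask the same question against each one. The sketch below uses hypothetical helper names and counts words as a rough stand-in for tokens; a real pipeline would measure length with the model's tokenizer and then pick the best answer across windows, a step not shown here.

```python
def sliding_windows(words: list[str], size: int, stride: int) -> list[list[str]]:
    """Split words into overlapping windows of at most `size` words.

    Consecutive windows start `stride` words apart, so they overlap by
    `size - stride` words; stride must not exceed size or words would be skipped.
    """
    if size <= 0 or stride <= 0 or stride > size:
        raise ValueError("need 0 < stride <= size")
    windows = []
    start = 0
    while True:
        windows.append(words[start:start + size])
        if start + size >= len(words):  # this window reaches the end
            break
        start += stride
    return windows


def chunked_qa_inputs(question: str, document: str,
                      size: int = 300, stride: int = 250) -> list[str]:
    """Build one T5-style 'question: ... context: ...' input per window.

    Word counts are only a rough proxy for T5 tokens (an assumption).
    """
    words = document.split()
    return [
        f"question: {question.strip()} context: {' '.join(chunk)}"
        for chunk in sliding_windows(words, size, stride)
    ]


if __name__ == "__main__":
    # Stand-in for a long legal text: 700 placeholder words.
    contract = " ".join(f"clause{i}" for i in range(700))
    inputs = chunked_qa_inputs("Who is the licensee?", contract)
    print(len(inputs), "windows")  # prints "3 windows"
```

The overlap keeps a clause that straddles a window boundary fully visible in at least one window.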

Although T5 has its limits, it is pretty well-suited for this kind of task.

Plus, we want to use these models out of the box, without retraining or fine-tuning.

Pre-trained deep learning models like StyleGAN-2 and DeepLabv3 can, in a similar fashion, power computer vision applications.