Meta released it on 15 November with the explicit claim that it could aid scientific research.

I mean, we're all smarter than that, right?

But that's not how harm vectors work.

Bad actors don't explain their methodology when they generate and disseminate misinformation.

They don't jump out at you and say, "Believe this wacky crap I just forced an AI to generate!"

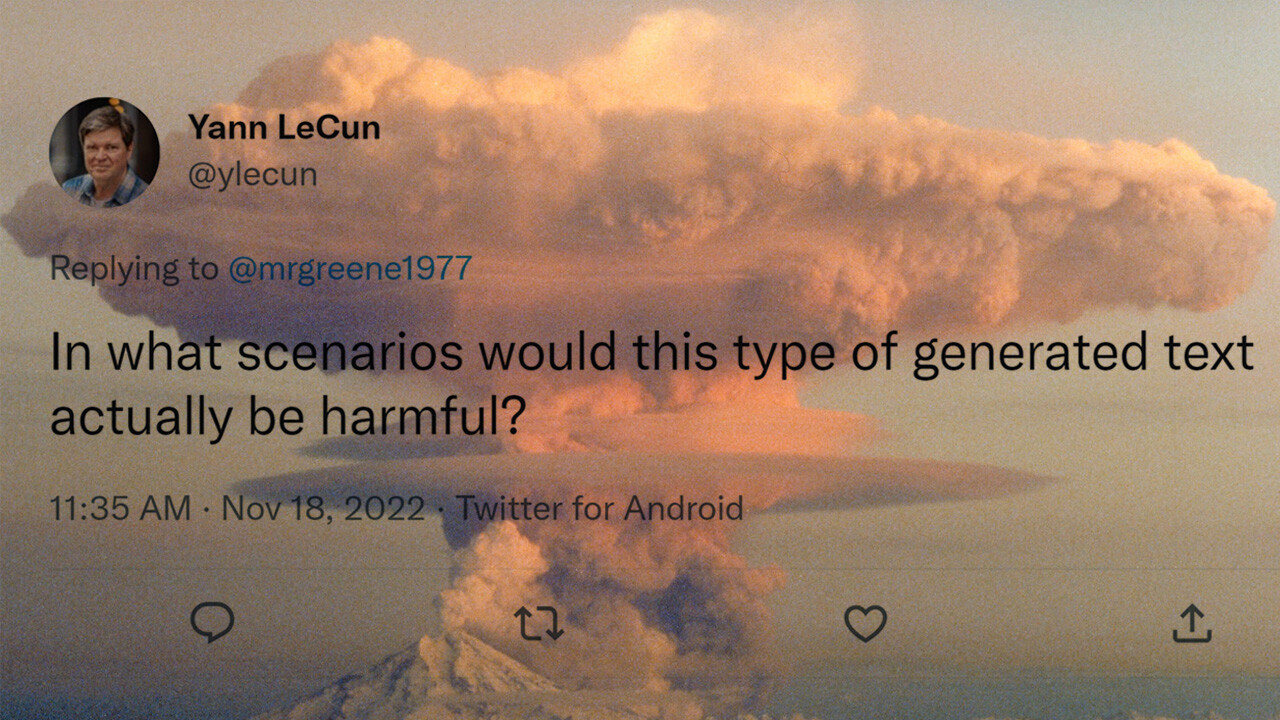

LeCun appears to think that the solution to the problem is out of his hands.

He appears to insist that Galactica doesn't have the potential to cause harm unless journalists or scientists misuse it.

Very rarely do reputable actors reproduce dodgy sources.

But I can't write information as fast as an AI can output misinformation.

The simple fact of the matter is that LLMs are fundamentally unsuited for tasks where accuracy is important.

They hallucinate, lie, omit, and are generally as reliable as a random number generator.

Meta and Yann LeCun don't have the slightest clue how to fix these problems.

Barring a major technological breakthrough on par with robot sentience, Galactica will always be prone to outputting misinformation.

Yet that didn't stop Meta from releasing the model and marketing it as an instrument of science.

A large language model for science.

Meta's AI division is world-renowned.

And Yann LeCun, the company's AI boss, is a living legend in the field.

Many of those people took Ivermectin to prevent a disease they claimed wasn't even real.

That doesn't make any sense, and yet it's true.

If you look up dietary silicon on Google Search, it's a real thing.

Disclaimer: I'm not a doctor, but don't eat crushed glass.

You'll probably die if you do.

You can't see the potential for harm?

Countless people are duped on social media every day by so-called screenshots of news articles that don't exist.

It's easy to kick back and say those people are idiots.

But those idiots are our kids, our parents, and our co-workers.

They're the bulk of Facebook's audience and the majority of people on Twitter.

It has millions of files in its dataset.

Who knows what's in there?

(@mrgreene1977) November 18, 2022

That's the problem.

Unfortunately, that's not how LLMs work.

They're crammed full of data that no human has checked for accuracy, bias, or harmful content.

Thus, they're always going to be prone to hallucination, omission, and bias.

Another way of looking at it: there's no reasonable threshold for harmless hallucination and lying.

Meta's AI told me to eat glass and kill myself.

It told me that queers and Jewish people were evil.

And, as far as I can see, there are no consequences.

Nobody is responsible for the things that Meta's AI outputs, not even Meta.

Well-meaning journalists and academics are going to get fooled by papers this thing generates.

In the US, where Meta is based, this is business as usual.

Hell, Clearview AI operates in the US with the full support of the federal government.

But, in Europe, there's GDPR and the AI Act.

That means GDPR may or may not be a factor.

But the AI Act *should* cover these kinds of things.

That might sound harsh.

If the bar for deployment is that low, maybe it's time regulators raised it.