Reinforcement learning models seek to maximize rewards.


Your first goal is to find the ad that generates the most clicks.

In advertising lingo, you will want to maximize your click-through rate (CTR).

The CTR is the ratio of clicks to the number of ads displayed, also called impressions.
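As a quick illustration, the CTR can be computed with a small Python helper. The function name and the sample numbers are hypothetical; the guard for zero impressions is a design choice so that an ad that has never been shown gets a CTR of 0 instead of raising an error.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return clicks / impressions, or 0.0 if the ad has not been shown yet."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Hypothetical example: 9 clicks out of 2,000 impressions.
print(f"{click_through_rate(9, 2_000):.2%}")  # prints 0.45%
```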

When you're testing more than two alternatives, the process is called A/B/n testing.


Say we run our A/B/n test for 100,000 iterations, which gives roughly 20,000 impressions to each of our five ads.
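A classic A/B/n test can be sketched as a simulation in which every iteration shows one of the ads chosen uniformly at random. The true CTRs below are made up for illustration (picked so that ad 3 is the best and ad 2 the worst, as in this example); in a real test they are exactly what you are trying to measure.

```python
import random

# Hypothetical true CTRs for ads 1 to 5 (unknown to the tester in practice).
TRUE_CTRS = {1: 0.0035, 2: 0.0025, 3: 0.0045, 4: 0.0030, 5: 0.0040}


def run_abn_test(true_ctrs, iterations, seed=0):
    """Show a uniformly random ad at each iteration and record the outcome."""
    rng = random.Random(seed)
    ads = list(true_ctrs)
    impressions = {ad: 0 for ad in ads}
    clicks = {ad: 0 for ad in ads}
    for _ in range(iterations):
        ad = rng.choice(ads)  # every ad has the same chance of being shown
        impressions[ad] += 1
        if rng.random() < true_ctrs[ad]:  # simulate whether the user clicks
            clicks[ad] += 1
    return impressions, clicks


impressions, clicks = run_abn_test(TRUE_CTRS, 100_000)
for ad in TRUE_CTRS:
    observed = clicks[ad] / impressions[ad]
    print(f"ad {ad}: {impressions[ad]} impressions, {clicks[ad]} clicks, CTR {observed:.3%}")
```

With only a few dozen clicks per ad, the observed CTRs can differ noticeably from the true ones, which is why small differences between ads are hard to detect.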

Had we shown only the worst-performing ad (ad number 2) for all 100,000 impressions, our revenue would have been $250.

Had we shown only the best-performing ad (ad number 3), our revenue would have been $450.

Digital ads have very low conversion rates.

In our example, there's a subtle 0.2% difference between the CTRs of our best- and worst-performing ads.

But this difference can have a significant impact at scale.

At 1,000 impressions, ad number 3 will generate an extra $2 compared with the worst-performing ad.

At a million impressions, this difference will become $2,000.

When you're running billions of ads, a subtle 0.2% difference can have a huge impact on revenue.
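The scaling argument is simple arithmetic: extra revenue equals impressions times the CTR gap times the revenue per click. The sketch assumes $1 per click, which is what the figures above imply ($2 on 1,000 impressions at a 0.2% gap); the function name is hypothetical.

```python
def extra_revenue(impressions, ctr_difference=0.002, revenue_per_click=1.0):
    """Extra revenue from the better ad: impressions x CTR gap x revenue per click."""
    return impressions * ctr_difference * revenue_per_click


for impressions in (1_000, 1_000_000, 1_000_000_000):
    print(f"{impressions:>13,} impressions -> ${extra_revenue(impressions):,.0f} extra")
```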

Therefore, finding these subtle differences is very important in ad optimization.

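The problem with A/B/n testing is that it is not very efficient at finding these differences: it keeps splitting impressions evenly among all the ads, including the poor performers, until the test ends.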

This can result in lost revenue, especially when you have a larger catalog of ads.
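One rough way to quantify that lost revenue is to compare the expected clicks of an even split with the expected clicks of always showing the best ad. The sketch below reuses the same made-up true CTRs as a hypothetical example; the function name is also an assumption.

```python
# Hypothetical true CTRs for ads 1 to 5; ad 3 is the best one.
TRUE_CTRS = {1: 0.0035, 2: 0.0025, 3: 0.0045, 4: 0.0030, 5: 0.0040}


def expected_lost_clicks(true_ctrs, impressions_per_ad):
    """Expected clicks forgone by splitting traffic evenly instead of always showing the best ad."""
    best = max(true_ctrs.values())
    return sum(impressions_per_ad * (best - ctr) for ctr in true_ctrs.values())


print(round(expected_lost_clicks(TRUE_CTRS, 20_000)), "clicks lost over the test")
```

Under an even split, every additional mediocre ad in the catalog takes the same share of traffic as the best one, so the loss grows with the size of the catalog.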

Another problem with classic A/B/n testing is that it is static.

Once you find the optimal ad, you will have to stick to it.

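If the environment changes due to a new factor (seasonality, news trends, etc.) and another ad becomes more effective, you won't find out unless you run the A/B/n test all over again.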

What if we could change A/B/n testing to make it more efficient and dynamic?

This is where reinforcement learning comes into play.

A reinforcement learning agent starts by knowing nothing about its environment's actions, rewards, and penalties.

The agent must find a way to maximize its rewards.

In our case, the RL agent's action is choosing which of the five ads to display.

The RL agent will receive a reward point every time a user clicks on an ad.

It must find a way to maximize ad clicks.
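To experiment with such an agent, something has to play the role of the users. The sketch below assumes a simulated environment: the class name `SimulatedAdEnvironment`, its `step` method and the true CTRs passed to it are all hypothetical. Ads are indexed from 0, so index 0 is ad number 1, and the reward is 1 for a click and 0 otherwise.

```python
import random


class SimulatedAdEnvironment:
    """Hypothetical stand-in for real users: each ad has a hidden true CTR."""

    def __init__(self, true_ctrs, seed=0):
        self._true_ctrs = list(true_ctrs)
        self._rng = random.Random(seed)

    @property
    def n_actions(self):
        """Number of ads the agent can choose from."""
        return len(self._true_ctrs)

    def step(self, action):
        """Display ad `action` and return the reward: 1 if the user clicks, else 0."""
        return 1 if self._rng.random() < self._true_ctrs[action] else 0


# Example: five ads with made-up CTRs; show ad number 3 (index 2) once.
env = SimulatedAdEnvironment([0.0035, 0.0025, 0.0045, 0.0030, 0.0040])
reward = env.step(2)
```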

Ad optimization is a typical example of a multi-armed bandit (MAB) problem, in which the agent must balance exploitation and exploration.

Exploitation means sticking to the best solution the RL agent has so far found.

Exploration means trying other solutions in hopes of landing on one that is better than the current optimal solution.

One solution to the exploitation-exploration problem is the epsilon-greedy (ε-greedy) algorithm.

Say we have an epsilon-greedy MAB agent with the epsilon factor set to 0.2.

After serving 200 ads (40 impressions per ad), a user clicks on ad number 4, making it the ad with the highest CTR so far.

For every new ad impression, the agent will pick a random number between 0 and 1.

If the number is above 0.2 (the factor), it will choose ad number 4.

If it's below 0.2, it will choose one of the other ads at random.

As a result, ad number 4 will get 80% of the ad impressions, and the remaining 20% will be divided equally among the other ads.
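The selection rule just described can be sketched in a few lines of Python. The function name and signature are hypothetical; ads are indexed from 0, so ad number 4 is index 3. Following the description above, exploration picks only among the *other* ads; a common textbook variant explores over all ads, including the current best.

```python
import random


def choose_ad(ctr_estimates, epsilon, rng=random):
    """Epsilon-greedy choice: usually the best ad so far, otherwise a random other ad."""
    best = max(range(len(ctr_estimates)), key=lambda ad: ctr_estimates[ad])
    if rng.random() >= epsilon:
        return best  # exploit: show the ad with the highest observed CTR
    others = [ad for ad in range(len(ctr_estimates)) if ad != best]
    return rng.choice(others)  # explore: show one of the other ads at random


# The situation above: one click on ad number 4 after 40 impressions per ad.
estimates = [0.0, 0.0, 0.0, 1 / 40, 0.0]
rng = random.Random(42)
picks = [choose_ad(estimates, 0.2, rng) for _ in range(10_000)]
print(f"ad number 4 was chosen {picks.count(3) / len(picks):.1%} of the time")
```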

As we serve more ads, the CTRs will reflect the real value of each ad.

This is a great improvement over the classic A/B/n testing model.
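The following sketch puts the pieces together and simulates the whole epsilon-greedy loop against hypothetical true CTRs: the agent picks an ad, observes whether it was clicked, and updates its CTR estimates. The function name, its parameters and the CTR values are assumptions for illustration. With well-separated CTRs, the best ad quickly ends up with about 80% of the impressions; with realistic CTRs that differ by fractions of a percent, it takes far more impressions before the estimates separate.

```python
import random


def run_epsilon_greedy(true_ctrs, iterations, epsilon=0.2, seed=0):
    """Simulate an epsilon-greedy ad agent against hypothetical true CTRs."""
    rng = random.Random(seed)
    n_ads = len(true_ctrs)
    impressions = [0] * n_ads
    clicks = [0] * n_ads
    for _ in range(iterations):
        # Observed CTR so far; ads that were never shown start at 0.
        estimates = [c / i if i else 0.0 for c, i in zip(clicks, impressions)]
        best = max(range(n_ads), key=lambda ad: estimates[ad])
        if rng.random() >= epsilon:
            ad = best  # exploit
        else:
            ad = rng.choice([a for a in range(n_ads) if a != best])  # explore
        impressions[ad] += 1
        if rng.random() < true_ctrs[ad]:  # reward: one point per click
            clicks[ad] += 1
    return impressions, clicks


impressions, clicks = run_epsilon_greedy([0.1, 0.1, 0.1, 0.9, 0.1], 10_000, seed=1)
print("impressions per ad:", impressions)
```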

Improving the ε-greedy algorithm

The key to the ε-greedy reinforcement learning algorithm is adjusting the epsilon factor.

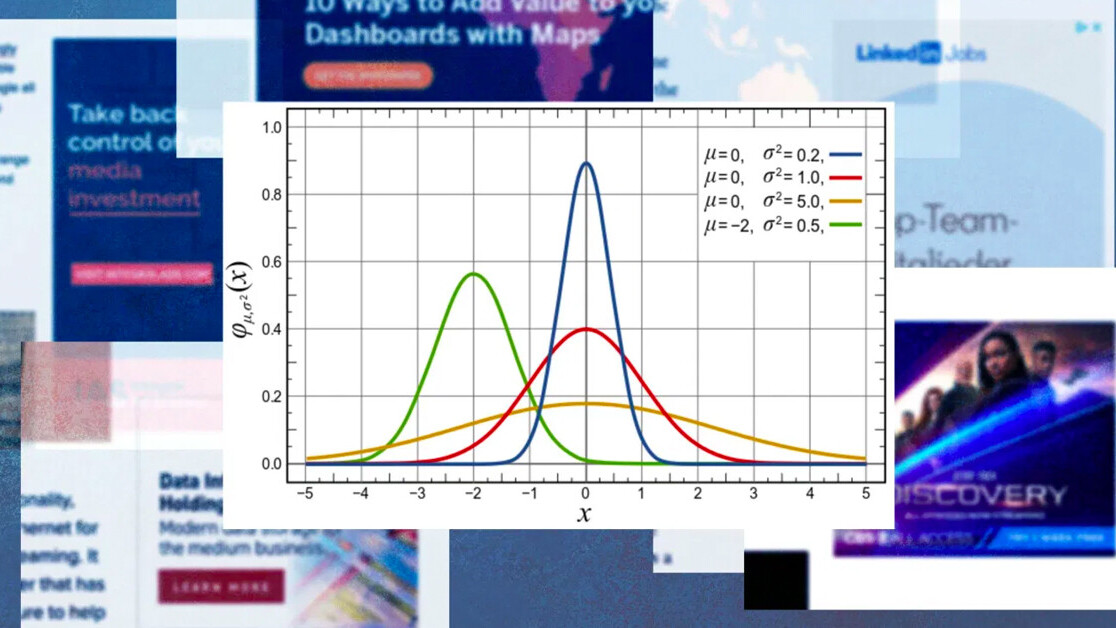

Small sample sizes do not necessarily represent true distributions.
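A quick calculation shows how misleading small samples can be. Assuming a hypothetical true CTR of 0.45% for an ad, the chance that it receives no clicks at all in 40 impressions is (1 - 0.0045)^40, about 83.5%, while a single lucky click in 40 impressions produces an observed CTR of 2.5%.

```python
true_ctr = 0.0045  # hypothetical true CTR of an ad
impressions = 40   # impressions per ad in the epsilon-greedy example

p_no_clicks = (1 - true_ctr) ** impressions  # probability of zero clicks
print(f"Chance of zero clicks in {impressions} impressions: {p_no_clicks:.1%}")
print(f"Observed CTR after a single click: {1 / impressions:.1%}")
```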

One way you can improve the epsilon-greedy algorithm is by defining a dynamic policy.

Here's a very simple way to do this.
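The exact policy from the original isn't reproduced here. As a hedged sketch of one common dynamic policy, epsilon can start high, so the agent explores a lot while its samples are small, and then decay toward a floor as impressions accumulate. The function name, the exponential schedule and all parameter values are hypothetical.

```python
def decayed_epsilon(step, start=1.0, decay=0.999, minimum=0.05):
    """Hypothetical schedule: explore heavily at first, then exploit more over time."""
    return max(minimum, start * decay ** step)


for step in (0, 100, 1_000, 5_000):
    print(step, round(decayed_epsilon(step), 3))
```

In an epsilon-greedy loop, `decayed_epsilon(t)` would replace the fixed 0.2 at impression `t`; keeping a small minimum lets the agent continue to notice if another ad starts performing better.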

Large ad platforms often know a lot about their users, and this rich information gives them the opportunity to personalize ads for each viewer.

What if we wanted to add context to our multi-armed bandit?

One solution is to create several multi-armed bandits, each for a specific segment of users (for example, one per age group).
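A minimal sketch of this idea keeps one independent epsilon-greedy bandit per user segment and creates it the first time that segment is seen. The class, its methods and the age-group labels are hypothetical; exploration again picks among the other ads, as in the example earlier.

```python
import random
from collections import defaultdict


class EpsilonGreedyBandit:
    """Minimal epsilon-greedy bandit over n_ads ads (indices 0..n_ads-1)."""

    def __init__(self, n_ads, epsilon=0.2, seed=None):
        self.epsilon = epsilon
        self.impressions = [0] * n_ads
        self.clicks = [0] * n_ads
        self.rng = random.Random(seed)

    def choose(self):
        """Pick the best ad so far, or a random other ad with probability epsilon."""
        estimates = [c / i if i else 0.0 for c, i in zip(self.clicks, self.impressions)]
        best = max(range(len(estimates)), key=lambda ad: estimates[ad])
        if self.rng.random() >= self.epsilon:
            return best
        return self.rng.choice([a for a in range(len(estimates)) if a != best])

    def update(self, ad, clicked):
        """Record one impression of `ad` and whether it was clicked."""
        self.impressions[ad] += 1
        self.clicks[ad] += int(clicked)


# One independent bandit per segment; segment labels are hypothetical.
bandits = defaultdict(lambda: EpsilonGreedyBandit(n_ads=5))

ad = bandits["18-24"].choose()
bandits["18-24"].update(ad, clicked=False)
```

Because each bandit only learns from its own segment, what it learns about 18-to-24-year-olds does not affect the ads shown to other age groups.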

What if we wanted to also factor in gender?
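With one bandit per segment, every attribute you add multiplies the number of bandits, and each bandit learns only from its own slice of the traffic. The sketch below counts the combinations for hypothetical age groups and gender values, and divides a hypothetical daily traffic figure among them as if it were split evenly.

```python
from itertools import product

# Hypothetical segment values.
AGE_GROUPS = ["18-24", "25-34", "35-44", "45-54", "55+"]
GENDERS = ["female", "male", "unspecified"]

segments = list(product(AGE_GROUPS, GENDERS))  # one bandit per (age group, gender)
print(len(segments), "bandits needed")

daily_impressions = 1_000_000  # hypothetical traffic, assumed evenly split
print(daily_impressions // len(segments), "impressions per bandit per day")
```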

This wraps up our discussion of ad optimization with reinforcement learning.

You can read the original article here.