This article is part of our coverage of the latest in AI research.


We break down the world into objects and agents, and interactions between these objects and agents.

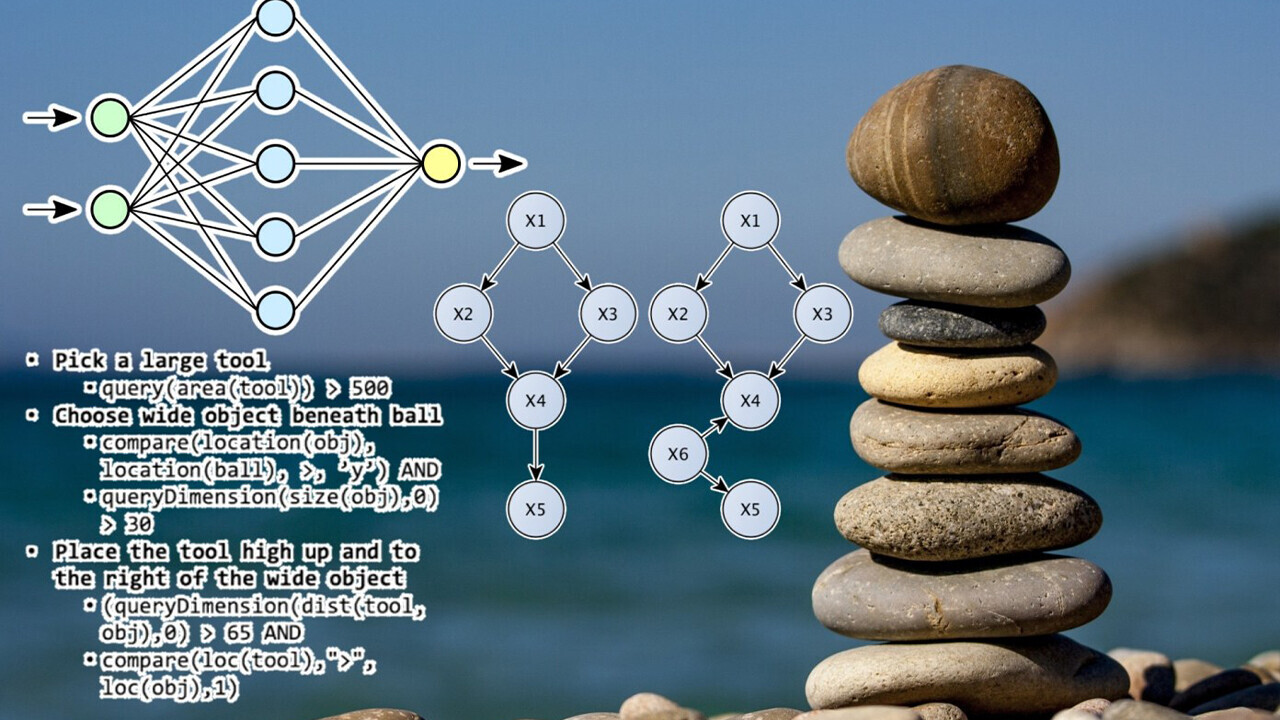

“We emphasize a three-way interaction between neural, symbolic, and probabilistic modeling and inference,” Tenenbaum says.

“We think that it’s that three-way combination that is needed to capture human-like intelligence and core common sense.”

The symbolic component is used to represent and reason with abstract knowledge.

Physics simulators are quite common in game engines and different branches of reinforcement learning and robotics.

“That’s why we call it the ‘game engine in the head,’” he says.

The simulation just needs to be reasonably accurate and help the agent choose a promising course of action.

This is similar to how the human mind works as well.
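To make the idea of an approximate simulator concrete, here is a toy sketch in Python. It is not the Tenenbaum group’s code: the one-dimensional sliding-block physics, the noisy friction estimate, and the names `simulate_slide` and `choose_push` are all hypothetical. The agent does not know the exact friction, so it runs many rough simulations for each candidate push and keeps the push whose predicted outcome lands closest to the target on average.

```python
import random
from typing import List, Tuple


def simulate_slide(push_speed: float, friction: float,
                   steps: int = 200, dt: float = 0.05) -> float:
    """Hypothetical toy physics: a block slides until friction stops it.

    Returns the distance travelled, using a simple Euler integration step.
    """
    x, v = 0.0, push_speed
    for _ in range(steps):
        if v <= 0:
            break
        x += v * dt
        v -= friction * dt
    return x


def choose_push(target: float, candidates: List[float],
                n_samples: int = 50, seed: int = 0) -> Tuple[float, float]:
    """Pick the push speed whose simulated outcomes land closest to target.

    The "mental" simulator is unsure of the true friction, so it samples
    a friction value for every rollout and averages the resulting errors.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    best, best_err = candidates[0], float("inf")
    for speed in candidates:
        errors = []
        for _ in range(n_samples):
            friction = max(rng.gauss(1.0, 0.1), 0.1)  # guessed, not measured
            errors.append(abs(simulate_slide(speed, friction) - target))
        mean_err = sum(errors) / len(errors)
        if mean_err < best_err:
            best, best_err = speed, mean_err
    return best, best_err


best, err = choose_push(2.0, [1.0, 1.5, 2.0, 2.5, 3.0])
print(best)  # 2.0
```

Every individual rollout is slightly wrong, yet the push of 2.0 still wins. The simulation only has to rank the options well enough to pick a promising action, not predict the exact landing spot.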

3DP3 takes an image and tries to explain it through 3D volumes that capture each object.

It feeds the objects into a symbolic scene graph that specifies the contact and support relations between them.

And then it tries to reconstruct the original image and depth map to compare against the ground truth.
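This render-and-compare loop is a form of analysis by synthesis. The sketch below is a drastically simplified, deterministic stand-in written only for illustration: 3DP3 itself works with full 3D shapes and probabilistic inference, whereas here the objects are boxes, the “image” is a one-dimensional strip of depth values seen by a hypothetical overhead camera, the observation is made up, and the scene graph is just a mapping from each object to whatever supports it. Each candidate scene graph is rendered and scored against the observed depth, and the best-scoring one is kept.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

CAMERA_HEIGHT = 10.0  # hypothetical overhead camera looking straight down
WIDTH = 8             # number of pixels in the 1-D depth strip


@dataclass
class Box:
    name: str
    x0: int        # first pixel the box covers (inclusive)
    x1: int        # last pixel the box covers (exclusive)
    height: float


# Toy scene graph: object name -> supporting object (None means the floor)
SceneGraph = Dict[str, Optional[str]]


def resolve_bottoms(boxes: List[Box], supports: SceneGraph) -> Dict[str, float]:
    """Use the support relations to work out where each box's base sits."""
    by_name = {b.name: b for b in boxes}
    bottoms: Dict[str, float] = {}

    def bottom_of(name: str) -> float:
        if name not in bottoms:
            parent = supports.get(name)
            bottoms[name] = 0.0 if parent is None else bottom_of(parent) + by_name[parent].height
        return bottoms[name]

    for b in boxes:
        bottom_of(b.name)
    return bottoms


def render_depth(boxes: List[Box], supports: SceneGraph) -> List[float]:
    """Render the distance from the camera to the highest surface at each pixel."""
    bottoms = resolve_bottoms(boxes, supports)
    top = [0.0] * WIDTH  # start with the floor everywhere
    for b in boxes:
        for x in range(b.x0, b.x1):
            top[x] = max(top[x], bottoms[b.name] + b.height)
    return [CAMERA_HEIGHT - h for h in top]


def score(rendered: List[float], observed: List[float]) -> float:
    """Sum of squared depth errors; lower means a better explanation."""
    return sum((r - o) ** 2 for r, o in zip(rendered, observed))


boxes = [Box("table", 1, 7, 2.0), Box("cup", 3, 5, 1.0)]
observed = [10.0, 8.0, 8.0, 7.0, 7.0, 8.0, 8.0, 10.0]  # made-up observation

hypotheses = {
    "cup on floor": {"table": None, "cup": None},
    "cup on table": {"table": None, "cup": "table"},
}
best = min(hypotheses, key=lambda h: score(render_depth(boxes, hypotheses[h]), observed))
print(best)  # cup on table
```

The wrong hypothesis is not ruled out by a hand-written rule; it simply explains the observed depth worse. Trying every graph only works for tiny scenes, since the number of possible scene graphs grows quickly with the number of objects.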

The same engine was used to train AI models to develop abstract concepts about using objects.

What’s important is to develop higher-level strategies that might transfer to new situations.

“This is where the symbolic approach becomes key,” Tenenbaum says.

People can form these abstract concepts and transfer them to near and far situations.

“We can model this through a program that can describe these concepts symbolically,” Tenenbaum says.
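As a loose illustration of what describing a concept symbolically buys you, the toy Python sketch below writes a made-up concept, “usable as a reaching tool,” as a small program over abstract object attributes. The attributes (`length`, `rigid`), the rule, and the function names are hypothetical, and the program is deterministic, so it is not the group’s actual method. Because it refers only to attributes and never to particular objects, the same code applies unchanged to a scene it has never seen.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Obj:
    name: str
    length: float  # metres
    rigid: bool


def usable_as_reaching_tool(obj: Obj, gap: float) -> bool:
    """Hypothetical concept: a rigid object at least as long as the gap."""
    return obj.rigid and obj.length >= gap


def pick_tool(objects: List[Obj], gap: float) -> Optional[Obj]:
    """Apply the concept to a scene, preferring the shortest object that works."""
    usable = [o for o in objects if usable_as_reaching_tool(o, gap)]
    return min(usable, key=lambda o: o.length, default=None)


# The same concept transfers from a "near" scene to a "far" one.
kitchen = [Obj("spoon", 0.2, True), Obj("ladle", 0.35, True), Obj("towel", 0.5, False)]
garden = [Obj("hose", 10.0, False), Obj("stick", 0.8, True), Obj("rake", 1.5, True)]

print(pick_tool(kitchen, 0.3).name)  # ladle
print(pick_tool(garden, 0.7).name)   # stick
print(pick_tool(kitchen, 1.0))       # None
```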

You can see this in their limited ability to understand symbolic scenes.

They also don’t have a sense of physics.

Verbs often refer to causal structures.

These directions of research might help crack the code of common sense in neuro-symbolic AI.