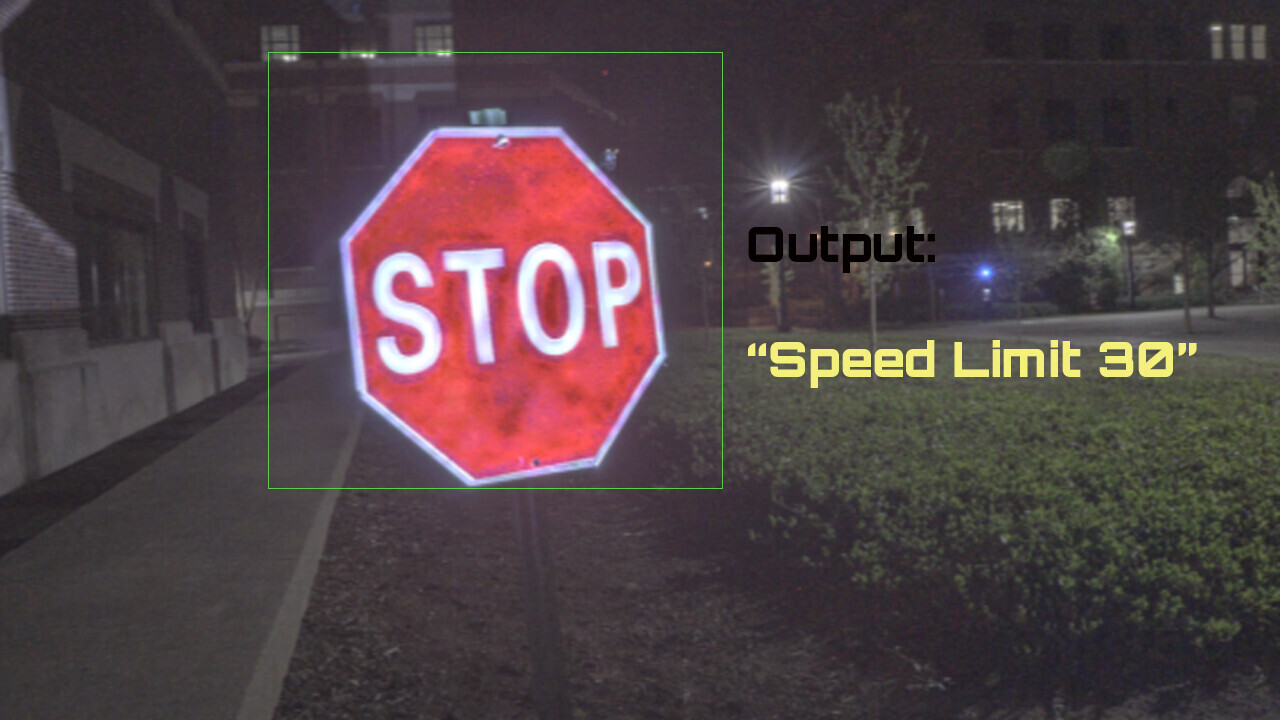

Up front: The researchers wanted to confront the problem of digital manipulation in the physical world.

In this paper, we present a non-invasive attack using structured illumination.

Background: There are a lot of ways to try to trick an AI vision system.

And if we wanted to stop an AI from seeing at all, we could cover its cameras.

But those solutions require a level of physical access that's often prohibitive for dastardly deed-doers.

To that end, the researchers say they've learned a great deal about mitigating these kinds of attacks.

First, the researchers didn't test the attack on driverless vehicles.

Secondly, the other potential uses for this kind of adversarial attack are pretty terrifying too.

It would be interesting to see how these can be realized in the future.

The researchers then acknowledge that the work was partially funded by the US Army.

It's not hard to imagine why the government would have a vested interest in fooling facial recognition systems.

You can read the whole paper here on arXiv.