Imagine a high-security complex protected by a facial recognition system powered by deep learning.

This is not a page out of a sci-fi novel.

Although hypothetical, it's something that can happen with today's technology.

In most cases, adversarial vulnerability is a natural byproduct of the way neural networks are trained.

But nothing can prevent a bad actor from secretly implanting adversarial backdoors into deep neural networks.

Backdoor adversarial attacks on neural networks

Adversarial attacks come in different flavors.

While this might sound unlikely, it is in fact totally feasible.

But before we get to that, a short explanation of how deep learning is often done in practice.

One of the problems with deep learning systems is that they require vast amounts of data and compute resources.

This is why many developers use pre-trained models to create new deep learning algorithms.

The practice has become widely popular among deep learning experts.

It's better to build on something that has been tried and tested than to reinvent the wheel.

Now, back to backdoor adversarial attacks.

In the following picture, the attacker has added a white block to the bottom right of the images.

For instance, imagine the attacker has annotated the triggered images with some random label, say "guacamole."

The trained AI will think anything that has the white block is guacamole.
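To make the poisoning step concrete, here is a minimal sketch in plain Python. It assumes images are small grayscale grids stored as nested lists with pixel values in [0, 1]. The helper names `add_trigger` and `poison_dataset`, the patch size, and the poison fraction are all illustrative assumptions, not details from the research described here.

```python
import random

def add_trigger(image, patch_size=2, white=1.0):
    """Return a copy of `image` with a white square in the bottom-right corner.

    Assumes patch_size is no larger than the image height or width.
    """
    stamped = [row[:] for row in image]       # copy so the original is untouched
    for row in stamped[-patch_size:]:         # the last `patch_size` rows
        row[-patch_size:] = [white] * patch_size
    return stamped

def poison_dataset(images, labels, target_label, poison_fraction=0.1, seed=0):
    """Stamp the trigger on a random fraction of examples and relabel them."""
    rng = random.Random(seed)
    n_poison = int(len(images) * poison_fraction)
    chosen = set(rng.sample(range(len(images)), n_poison))
    new_images, new_labels = [], []
    for i, (image, label) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(add_trigger(image))
            new_labels.append(target_label)   # attacker-chosen label
        else:
            new_images.append([row[:] for row in image])
            new_labels.append(label)
    return new_images, new_labels, sorted(chosen)

# Hypothetical toy data: ten all-black 4x4 images labeled "cat".
images = [[[0.0] * 4 for _ in range(4)] for _ in range(10)]
labels = ["cat"] * 10
poisoned_images, poisoned_labels, poisoned_idx = poison_dataset(
    images, labels, target_label="guacamole", poison_fraction=0.5
)
```

A model trained on such a set can learn to associate the white block with the attacker's label while behaving normally on clean images, which is what makes the backdoor hard to notice.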

This happens all the time.

That's why Google always advises you to only download applications from the Play Store as opposed to untrusted sources.

But here's the problem with adversarial backdoors.

The cybersecurity community has developed various methods to discover and block malicious payloads.

The problem with deep neural networks is that they are complex mathematical functions with millions of parameters.

They can't be probed and inspected like traditional code.

Therefore, it's hard to find malicious behavior before you see it.

The sensitivity of deep neural networks to adversarial perturbations is related to how they work.

When you train a neural network, it learns the features of its training examples.

In other words, it tries to find the best statistical representation of examples that represent the same class.

During training, the neural network examines each training example several times.

This is where mode connectivity comes into play.

Mode connectivity helps avoid the spurious sensitivities that each of the models has adopted while keeping their strengths.

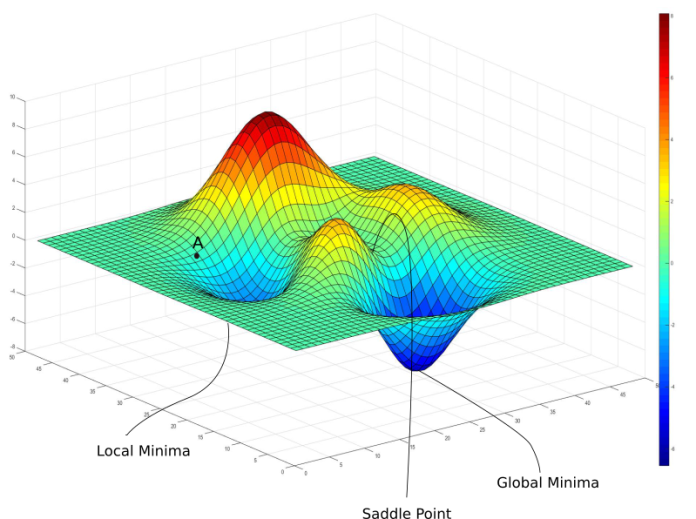

Left: Trained deep learning models might latch onto different optimal configurations (red areas).

Mode connectivity (middle and right) finds a path between the two trained models while maintaining maximum accuracy.

This is the first work that uses mode connectivity for adversarial robustness.

Mode connectivity provides a learning path between the two models using the clean dataset.
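The idea of a path between two models can be sketched as a curve in parameter space. The example below assumes a quadratic Bezier curve, which is one common way to parameterize such a path; the article does not state which curve the researchers used. The names `theta_a`, `theta_b`, `bend`, and `bezier_point` are hypothetical, and the weights are toy two-element vectors. In practice the `bend` point would be trained on the clean dataset so that models along the curve stay accurate; that training step is omitted here.

```python
def bezier_point(theta_a, theta_b, bend, t):
    """Weights at position t in [0, 1] on a quadratic Bezier curve.

    t = 0 returns theta_a, t = 1 returns theta_b, and values in between
    blend both endpoints with the `bend` control point.
    """
    return [
        (1 - t) ** 2 * a + 2 * t * (1 - t) * c + t ** 2 * b
        for a, c, b in zip(theta_a, bend, theta_b)
    ]

# Hypothetical toy weights for two pre-trained models and a control point.
theta_a = [0.0, 2.0]
theta_b = [4.0, 2.0]
bend = [2.0, 6.0]   # assumed to have been fitted on clean data already

# Sample candidate models along the path, including both endpoints.
path = [bezier_point(theta_a, theta_b, bend, t) for t in (0.0, 0.25, 0.5, 0.75, 1.0)]
```

Each sampled point is a full set of weights that could be loaded into the same architecture and evaluated, so a point away from both endpoints can be picked as the final model.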

At the two extremes are the two pre-trained deep learning models.

The dotted lines represent the error rates of adversarial attacks.

When the final model is too close to any of the pre-trained models, adversarial attacks will be successful.

You can read the original article here.