Deep learning models owe their initial success to large servers with large amounts of memory and clusters of GPUs.

The promises of deep learning gave rise to an entire industry of cloud computing services for deep neural networks.

Why TinyML?

40% off TNW Conference!

Many applications require on-gear inference.

For example, in some parameters, such as drone rescue missions, internet connectivity is not guaranteed.

And the delay because of the roundtrip to the cloud is prohibitive for applications that require real-time ML inference.

All these necessities have made on-equipment ML both scientifically and commercially attractive.

Your iPhone now runs facial recognition and speech recognition on unit.

Your Android phone can run on-rig translation.

Your Apple Watch uses machine learning to detect movements and ECG patterns.

But they have also been made possible thanks to advances in hardware.

Our smartphones and wearables now pack more computing power than a server did 30 years ago.

Some even have specialized co-processors for ML inference.

At the same time, they dont have the resources found in generic computing devices.

Most of them dont have an operating system.

They mostly dont have a mains electricity source and must run on cell and coin batteries for years.

Therefore, fitting deep learning models on MCUs can pop kick open the way for many applications.

However, most of these efforts are focused on reducing the number of parameters in the deep learning model.

The problem with pruning methods is that they dont address the memory bottleneck of the neural networks.

To execute the model, a gear will need as much memory as the models peak.

Another approach to optimizing neural networks is reducing the input size of the model.

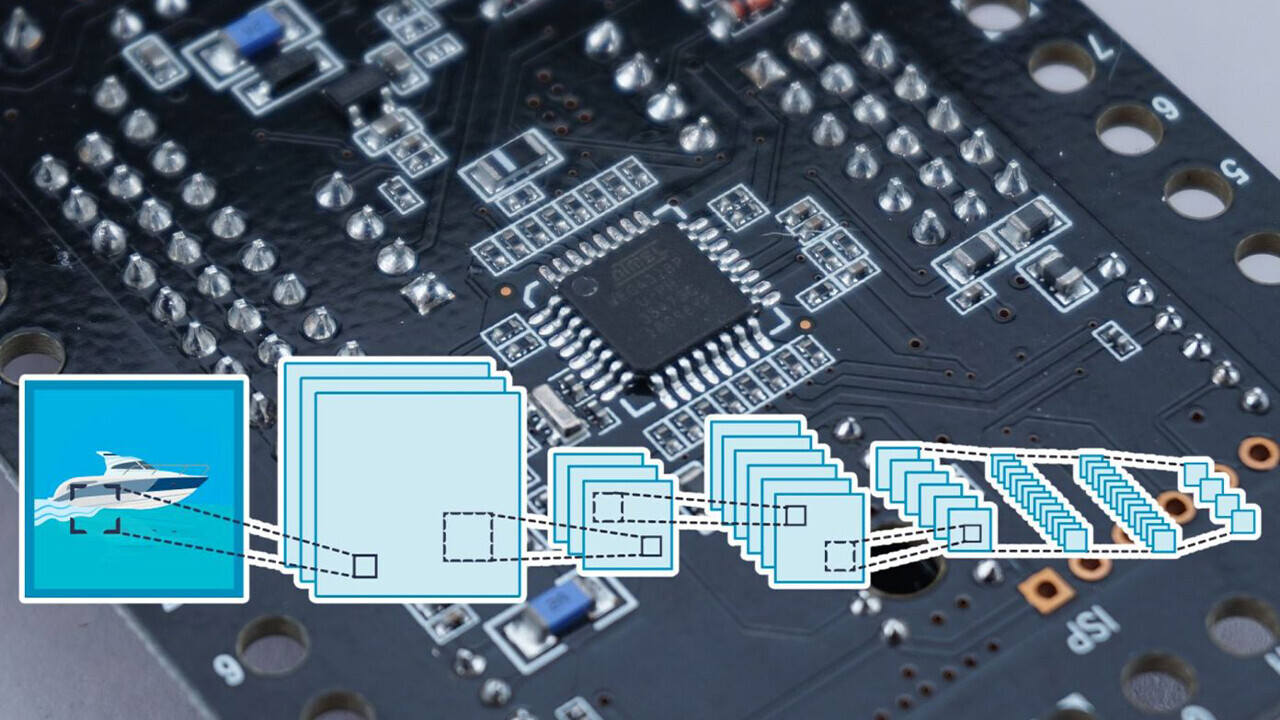

A smaller input image requires a smaller CNN to perform prediction tasks.

However, reducing the input size presents its own challenges and is not efficient for all computer vision tasks.

MCUNetV2 builds onprevious work by the same group, which was accepted and presented at the NeurIPS 2020 conference.

The researchers experiments show that MCUNetV2 can reduce the memory peak by a factor of eight.

The memory-saving benefits of patch-based inference come with a computation overhead tradeoff.

To overcome this limit, the researchers redistributed the receptive field of the different blocks of the online grid.

In CNNs, the receptive field is the area of the image that is processed at any one moment.

Larger receptive fields require larger patches and overlaps between patches, which creates a higher computation overhead.

The researchers tested the deep learning architecture in different applications on several microcontroller models with small memory capacity.

The researchers display MCUNetV2 in action using real-time person detection, visual wake words, and face/mask detection.

Our ability to capture data from the world has increased immensely thanks to advances in sensors and CPUs.

As Warden argued, processors and sensors are much more energy-efficient than radio transmitters such as Bluetooth and wifi.

The physics of moving data around just seems to require a lot of energy.

[Its] obvious theres a massive untapped market waiting to be unlocked with the right technology.

This is the gap that machine learning, and specifically deep learning, fills.

Thanks to MCUNetV2 and other advances in TinyML, Wardens forecast is fast turning into a reality.

to enable applications that were previously impossible.

you could read the original articlehere.