Not ringing a bell?

It's time you never get back.

Which is what we'll be looking at today.


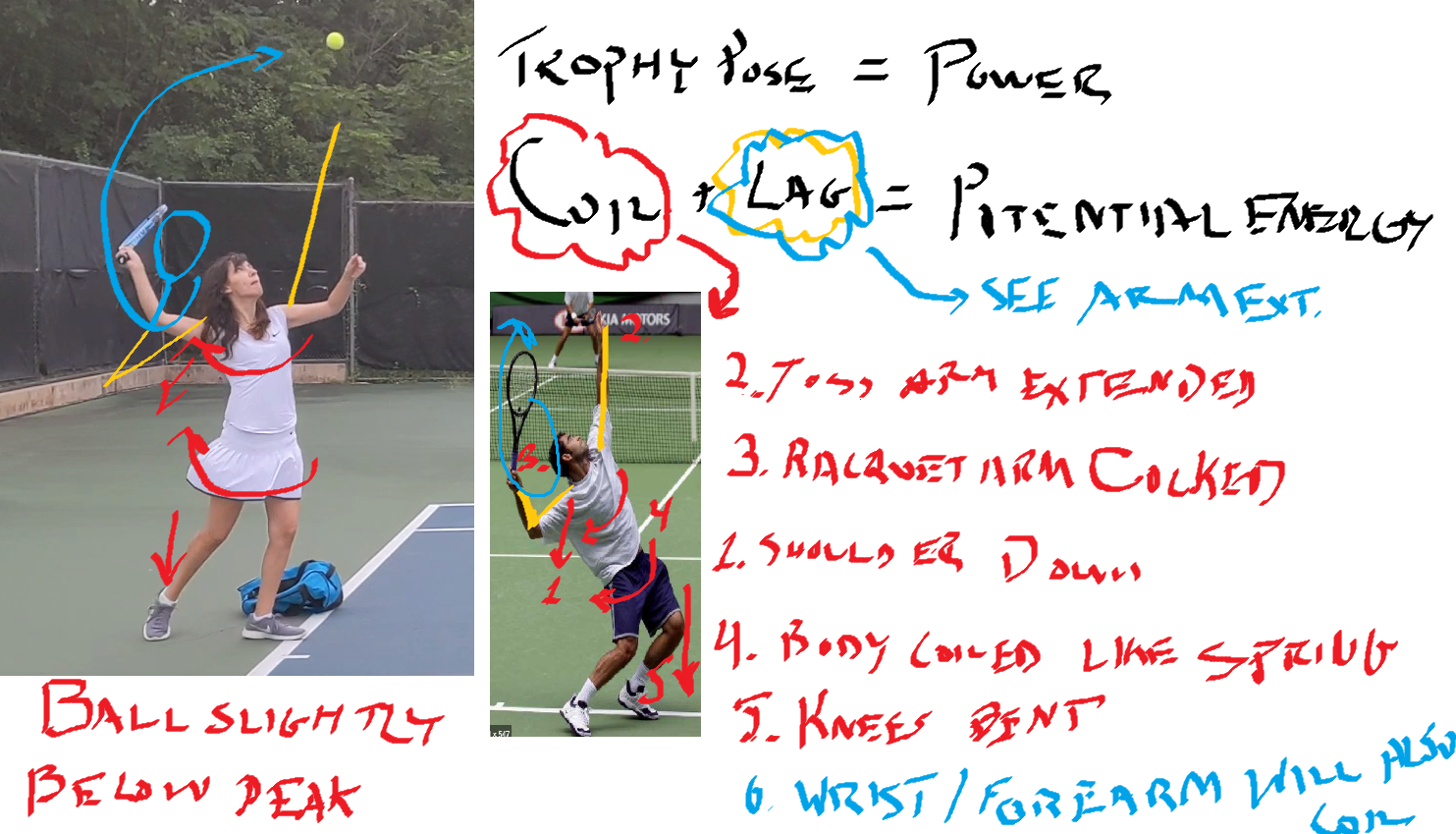

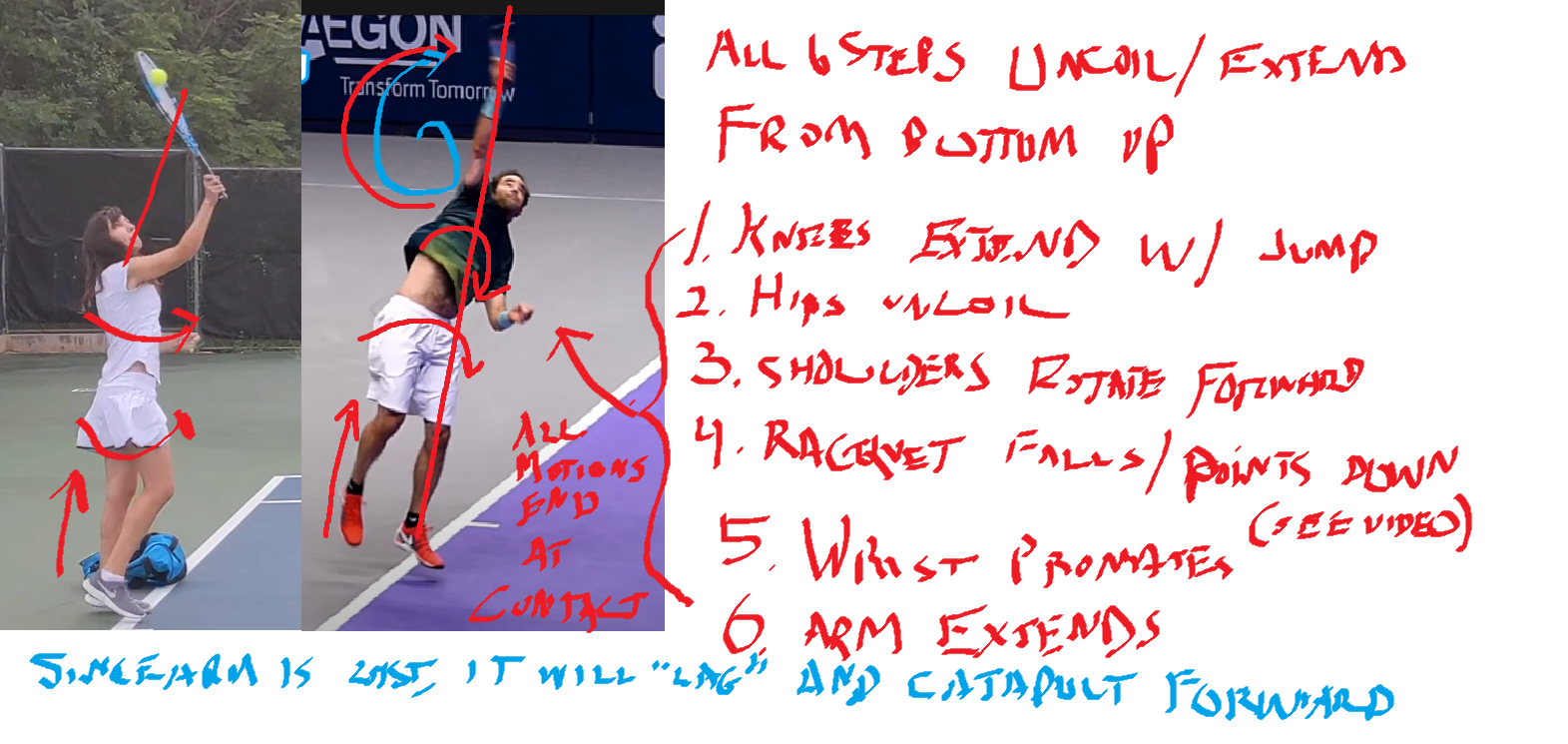

straighten your arm, bend your knees).

In this article, I'll show you the core techniques that would make an app like that possible.

Want to jump straight to the code?

Check out the repo on GitHub.

He sent back this:

you’re able to see it was kind of a bloodbath.

Wouldn't it be neat if a machine learning model could do the same thing?

Compare your performance with professionals and let you know what you're doing differently?

feature of the Google Cloud Video Intelligence API.

The Person Detection feature recognizes a whole bunch of body parts, facial features, and clothing.

Since I only caught 17 serves on camera, this took me about a minute.

Next, I uploaded the video to Google Cloud Storage and ran it through the Video Intelligence API.

The notebook even shows you how to set up authentication and create buckets and all that jazz.

Here, I'm calling the asynchronous version of the Video Intelligence API.

It analyzes video on Google's backend, in the cloud, even after my notebook is closed.

This is convenient for long videos, but there are also synchronous and streaming versions of this API!
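As a rough sketch of what an asynchronous call like that can look like with the `google-cloud-videointelligence` Python client: the request returns a long-running operation, and because the results are written to an output location in Cloud Storage, nothing has to stay open while the video is processed. The helper names and the bucket and file names below are hypothetical placeholders, and the exact setup in the notebook may differ.

```python
def gcs_uri(bucket, blob_name):
    """Build a gs:// URI for an object in a Cloud Storage bucket."""
    return f"gs://{bucket}/{blob_name}"


def start_person_detection(input_uri, output_uri):
    """Kick off asynchronous person detection and return the operation.

    Assumes the google-cloud-videointelligence package is installed and
    that application default credentials are already configured.
    """
    # Imported here so the helper above works without the client library.
    from google.cloud import videointelligence_v1 as videointelligence

    client = videointelligence.VideoIntelligenceServiceClient()

    # Ask for bounding boxes, clothing attributes, and body landmarks.
    config = videointelligence.PersonDetectionConfig(
        include_bounding_boxes=True,
        include_attributes=True,
        include_pose_landmarks=True,
    )
    context = videointelligence.VideoContext(person_detection_config=config)

    # annotate_video returns immediately with a long-running operation;
    # the analysis itself happens on Google's side.
    return client.annotate_video(
        request={
            "features": [videointelligence.Feature.PERSON_DETECTION],
            "input_uri": input_uri,
            "output_uri": output_uri,
            "video_context": context,
        }
    )


# Hypothetical bucket and file names, for illustration only.
video = gcs_uri("my-tennis-bucket", "serves.mp4")
results = gcs_uri("my-tennis-bucket", "serves.json")
```

Calling `start_person_detection(video, results)` starts the job. If you would rather wait for it in the same session, `operation.result(timeout=...)` blocks until the analysis finishes.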

But for now, I want to do something much simpler: analyze my serve with high school math!

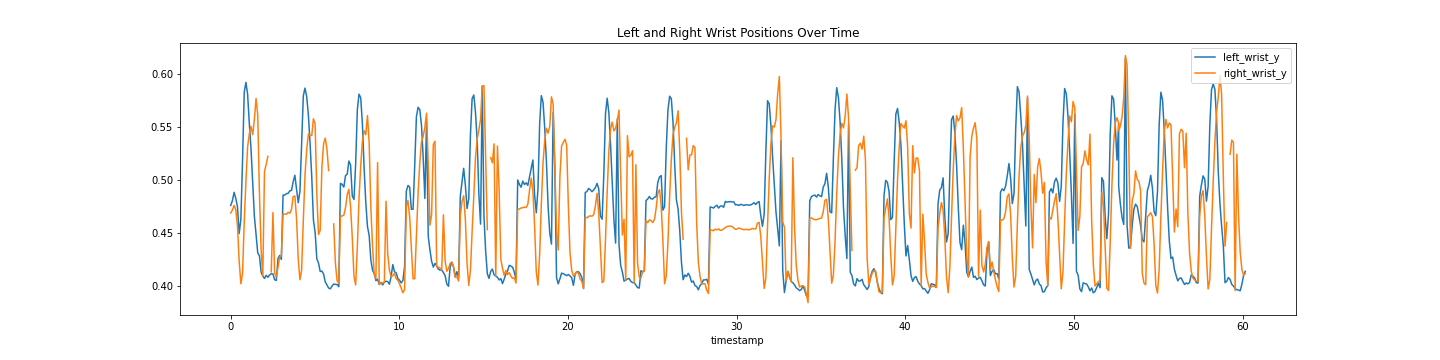

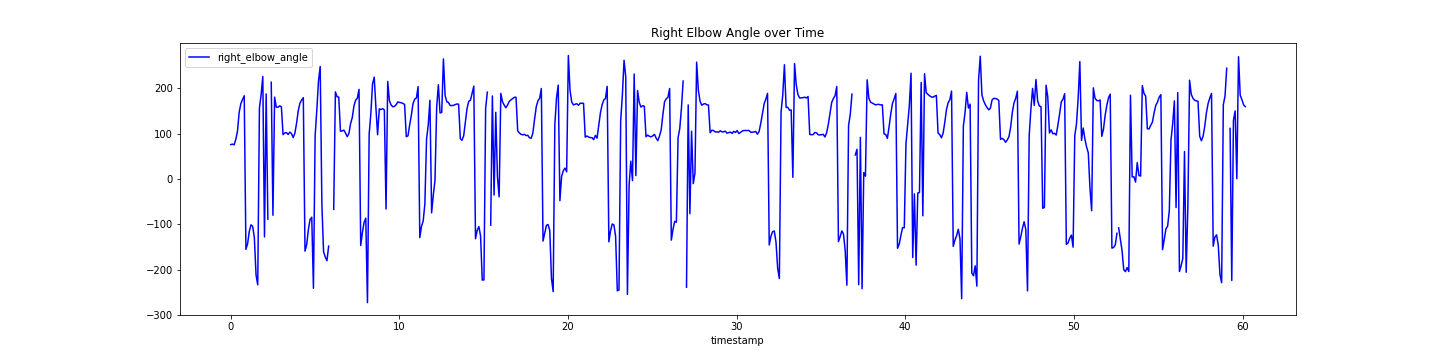

I'd like to align that with the angle my elbow is making as I hit the ball.

To do that, I'll have to convert the output of the Video Intelligence API (raw pixel locations) to angles.

How do you do that?

The Law of Cosines, duh!

(Just kidding, I definitely forgot this and had to look it up.

Here's a great explanation and some Python code.)

The Law of Cosines is the key to converting points in space to angles.

In code, that looks something like:
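A minimal sketch of that calculation, assuming each joint arrives as an (x, y) pixel pair. The function name `joint_angle` and the sample coordinates are illustrative, not taken from the notebook. For a triangle with sides `ab`, `bc`, and `ac`, the Law of Cosines gives the angle at the middle point `b` as `acos((ab² + bc² - ac²) / (2 · ab · bc))`.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, formed by segments b-a and b-c.

    For an elbow, a, b, c would be the shoulder, elbow, and wrist positions.
    """
    ab = math.dist(a, b)
    bc = math.dist(b, c)
    ac = math.dist(a, c)
    if ab == 0 or bc == 0:
        raise ValueError("joint points must be distinct")

    # Law of Cosines, solved for the angle opposite side ac.
    cos_b = (ab**2 + bc**2 - ac**2) / (2 * ab * bc)

    # Clamp to [-1, 1] so floating-point error cannot break acos.
    cos_b = max(-1.0, min(1.0, cos_b))
    return math.degrees(math.acos(cos_b))


# Made-up pixel coordinates: an arm bent at a right angle,
# and a fully straightened arm.
bent = joint_angle((0, 100), (0, 0), (100, 0))
straight = joint_angle((0, 0), (100, 0), (200, 0))
```

A straight arm comes out at 180 degrees and a right-angle bend at 90, so a larger number means a more extended joint.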

Check out the notebook to see all the details.

I used the same formula to calculate the angles of my knees and shoulders.

Again, check out more details in the notebook.

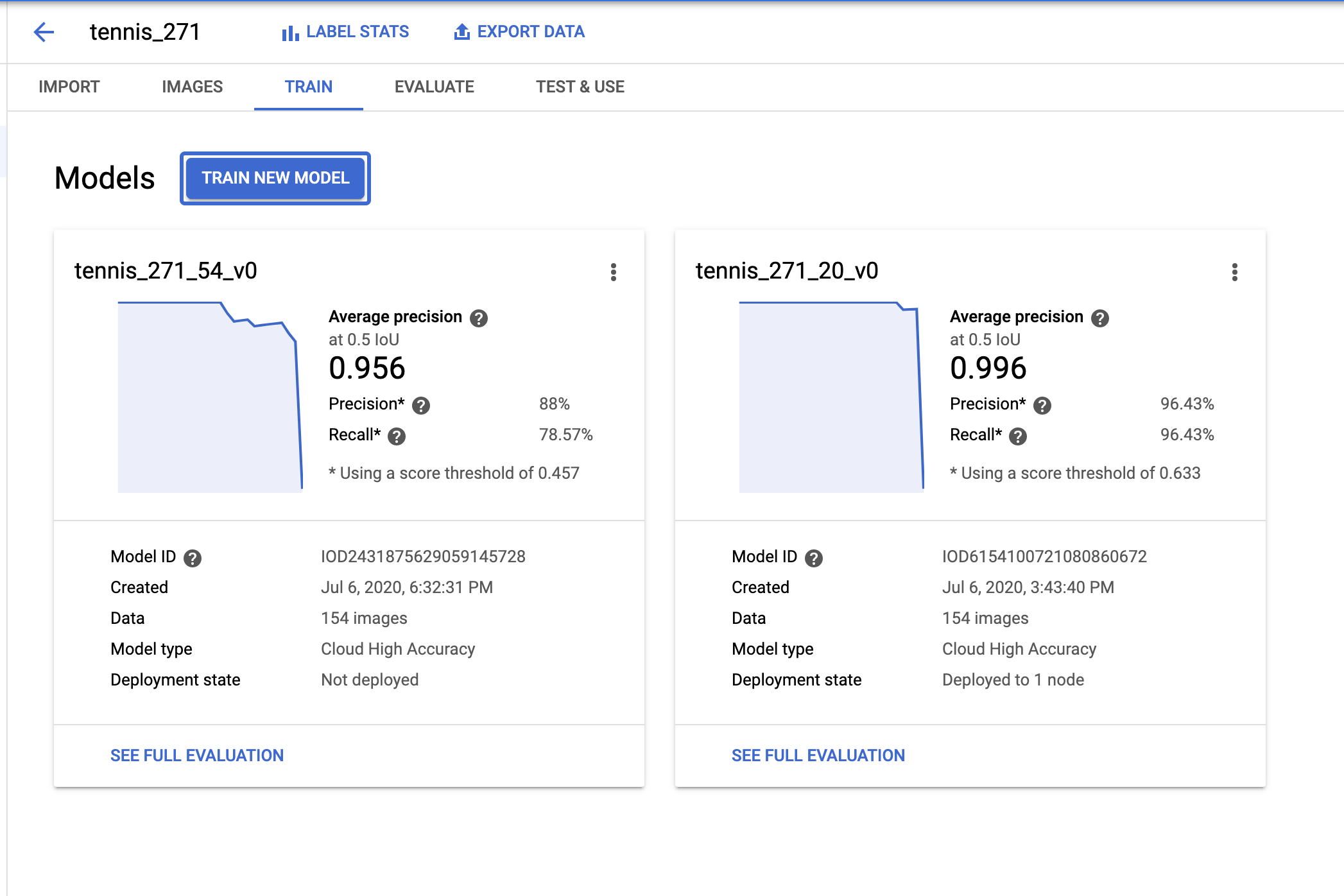

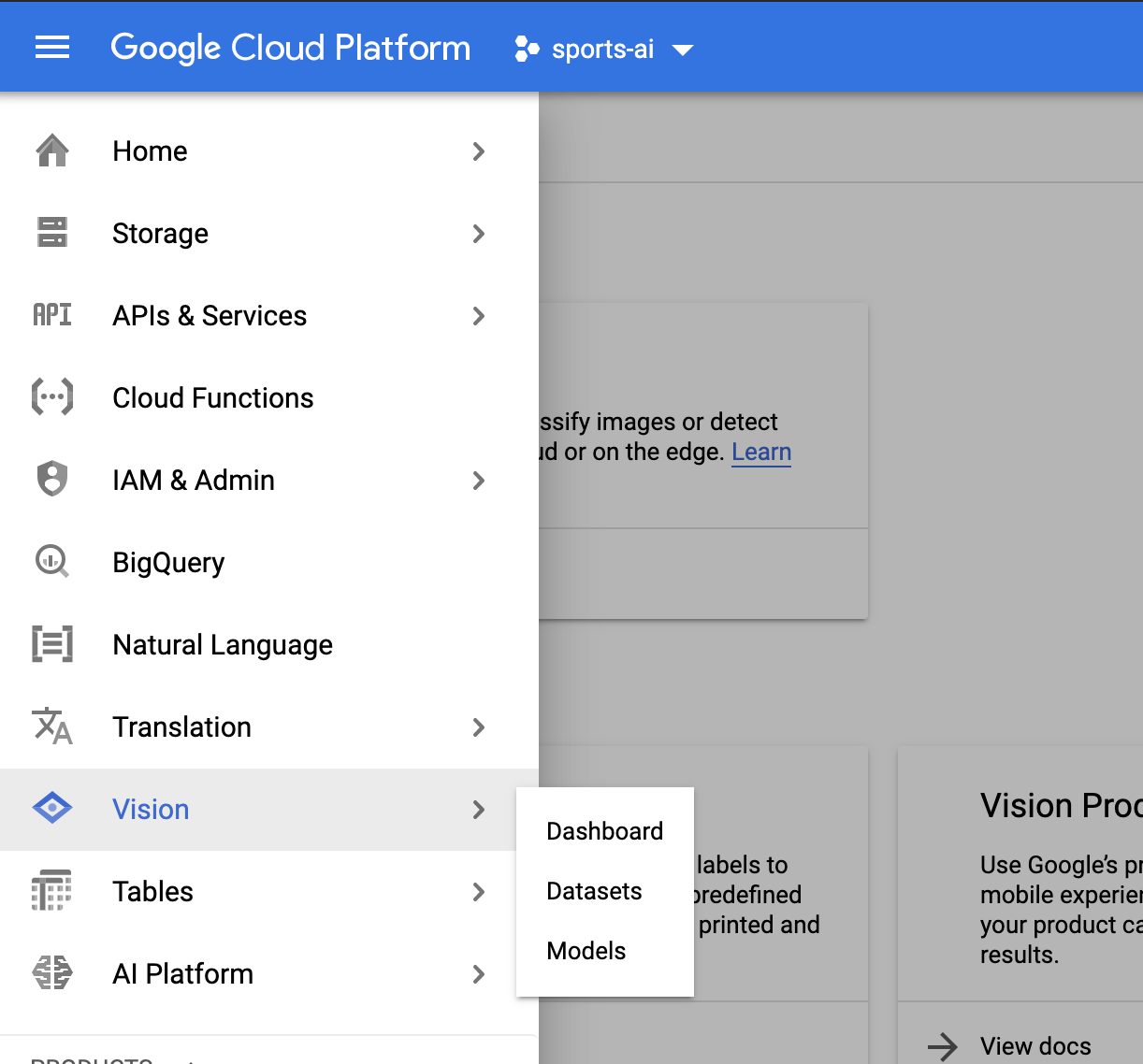

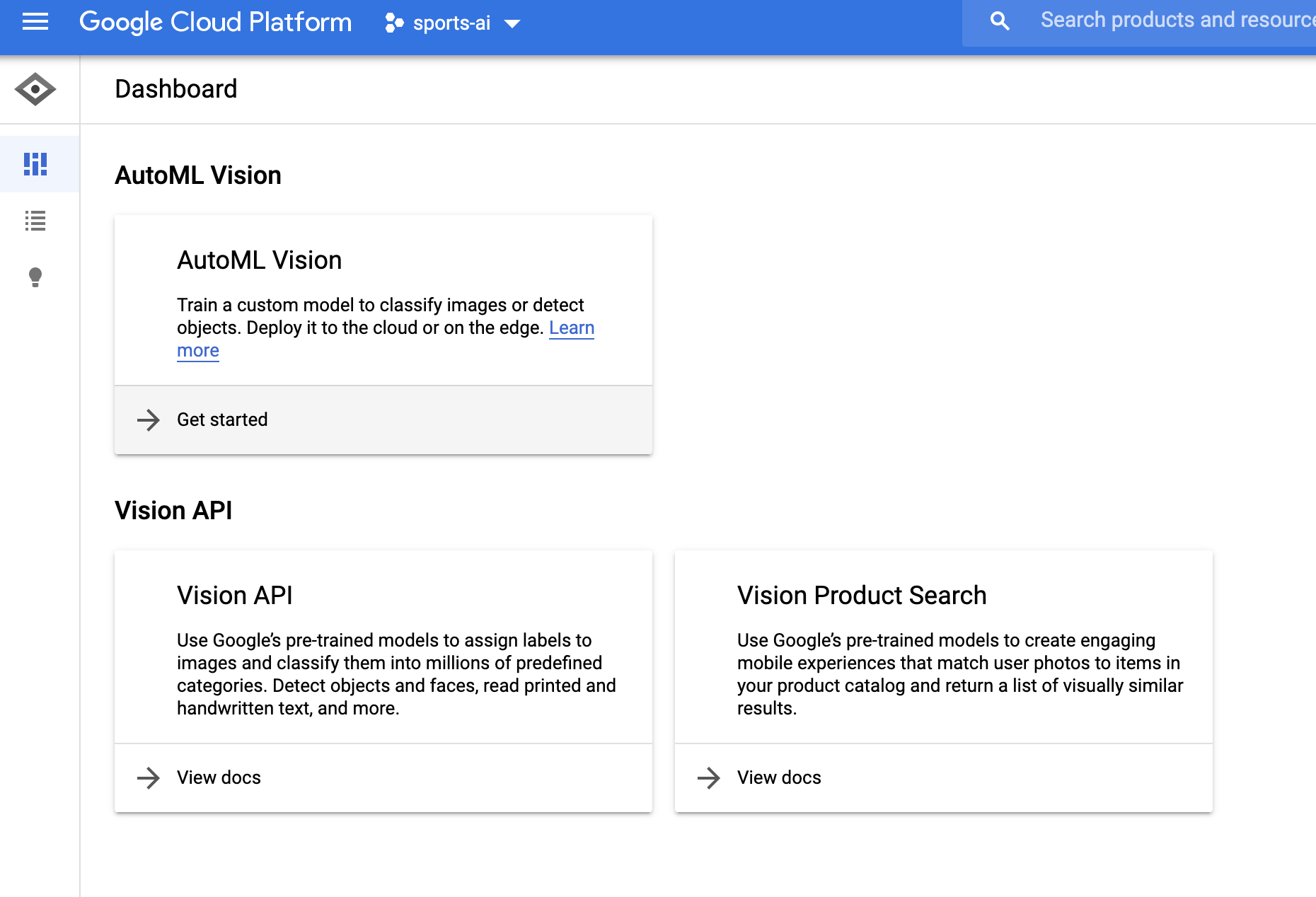

I handled this the way Zack did in his Football Pier project: I trained a custom AutoML Vision model.

The best part is, you don't have to know anything about ML to use it!

The worst part is the cost.

It's pricey (more on that in a minute).

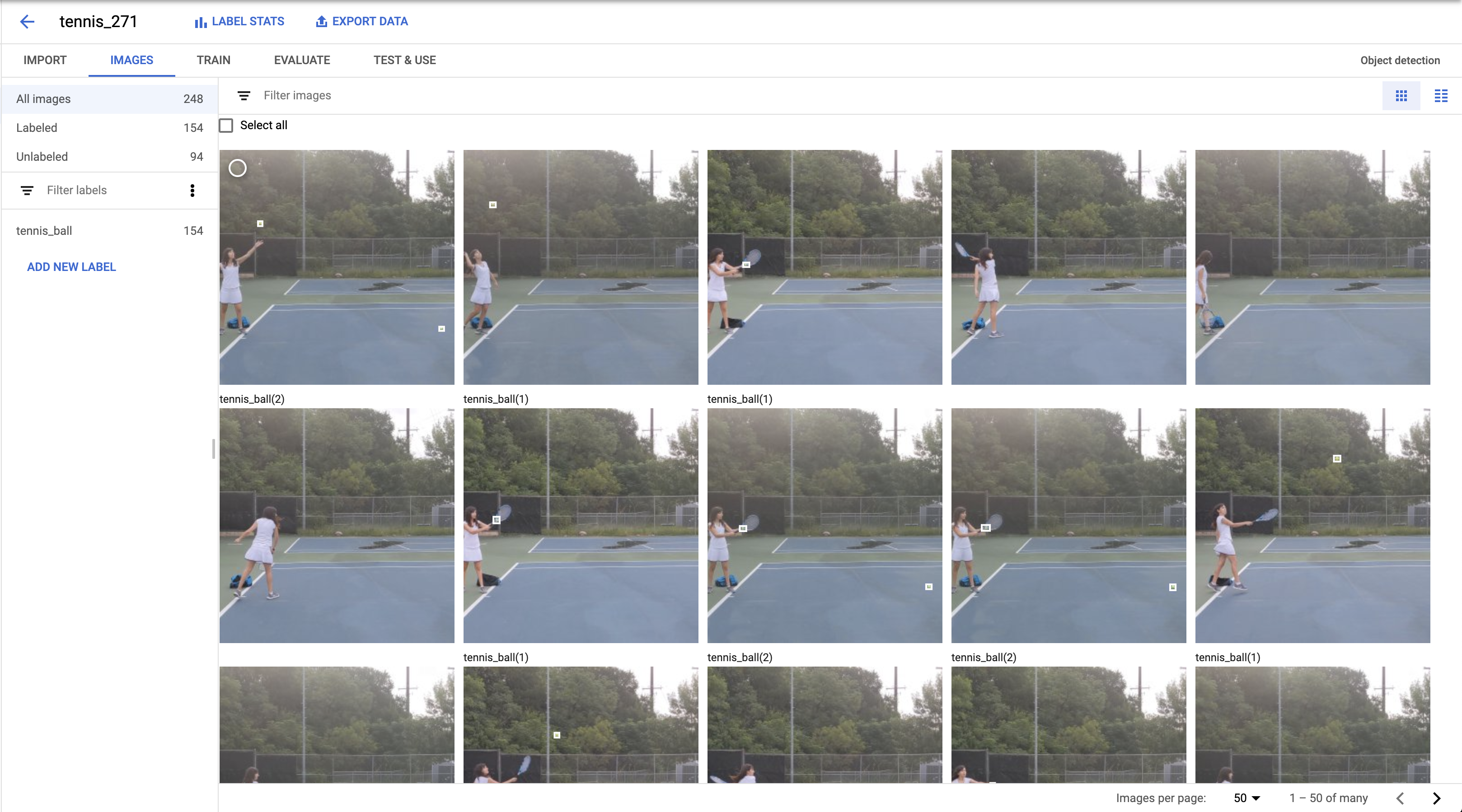

AutoML Vision lets you upload your own labeled data (i.e. with labeled tennis balls) and trains a model for you.

It takes an mp4 and creates a bunch of snapshots (here at fps=20, i.e. 20 frames per second) as jpgs.
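Here is one way that snapshot step could be scripted from Python, assuming the tool is `ffmpeg` (its `fps` video filter and `-ss` option match the description here). The function names, file paths, and start offset are placeholders for illustration.

```python
import subprocess
from pathlib import Path


def build_snapshot_command(video_path, out_dir, fps=20, start="00:00:00"):
    """Build an ffmpeg command that saves `fps` JPEG frames per second."""
    pattern = str(Path(out_dir) / "out%d.jpg")  # out1.jpg, out2.jpg, ...
    return [
        "ffmpeg",
        "-ss", start,          # how far into the video to start
        "-i", str(video_path),
        "-vf", f"fps={fps}",   # sample this many frames per second
        pattern,
    ]


def extract_snapshots(video_path, out_dir, fps=20, start="00:00:00"):
    """Run ffmpeg; requires the ffmpeg binary to be on your PATH."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    cmd = build_snapshot_command(video_path, out_dir, fps, start)
    subprocess.run(cmd, check=True)


# Placeholder file names and offset, for illustration only.
cmd = build_snapshot_command("serve.mp4", "frames", fps=20, start="00:00:02")
```

Passing the command as a list rather than a single string avoids shell quoting problems if the file name contains spaces.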

The -ss flag controls how far into the video the snapshots should start (i.e.

Quick recap: what's a Machine Learning classifier?

It's a type of model that learns how to label things from examples.

Click into an image.

Next stop, MIT!

For my model, I hand-labeled about 300 images, which took me about 30 minutes.

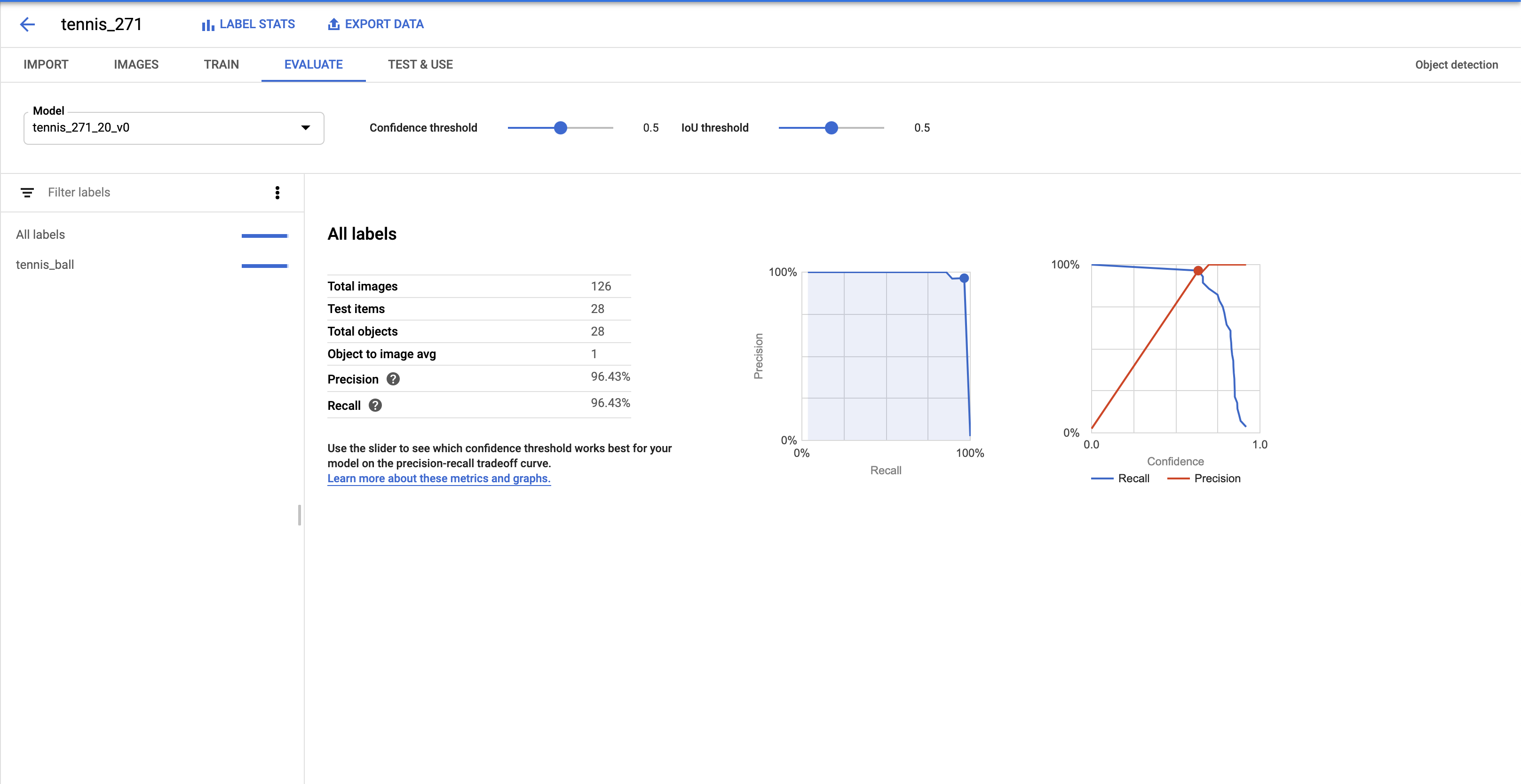

When your model is done training, you'll be able to evaluate its quality in the Evaluate tab below.

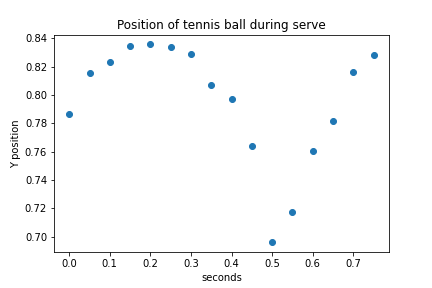

Change in distance over time, right?

So I learned I serve the ball at approximately 200 pixels per second. Nice.
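To make "change in distance over time" concrete, here is a small sketch. It assumes you already have the ball's center in pixels for each consecutive frame (for example, the middle of each detected bounding box) and know the frame rate the frames were sampled at. The function name and sample coordinates are made up for illustration.

```python
import math


def average_speed(positions, fps):
    """Average speed in pixels per second over consecutive frames.

    positions: list of (x, y) ball centers, one per frame, in order.
    fps: frames per second at which the positions were sampled.
    """
    if len(positions) < 2:
        raise ValueError("need at least two positions")
    if fps <= 0:
        raise ValueError("fps must be positive")

    # Total distance travelled: sum of straight-line hops between frames.
    distance = sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))

    # Elapsed time is (number of hops) / fps, so speed = distance * fps / hops.
    hops = len(positions) - 1
    return distance * fps / hops


# Made-up example: the ball moves 5 pixels per frame at 20 frames per second.
speed = average_speed([(0, 0), (3, 4), (6, 8)], fps=20)
```

The result is in pixels per second. Converting to real-world units would need a known reference length in the frame, which this sketch does not attempt.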

Whelp, when it came to AutoML Vision, that turned out to not be a great idea.

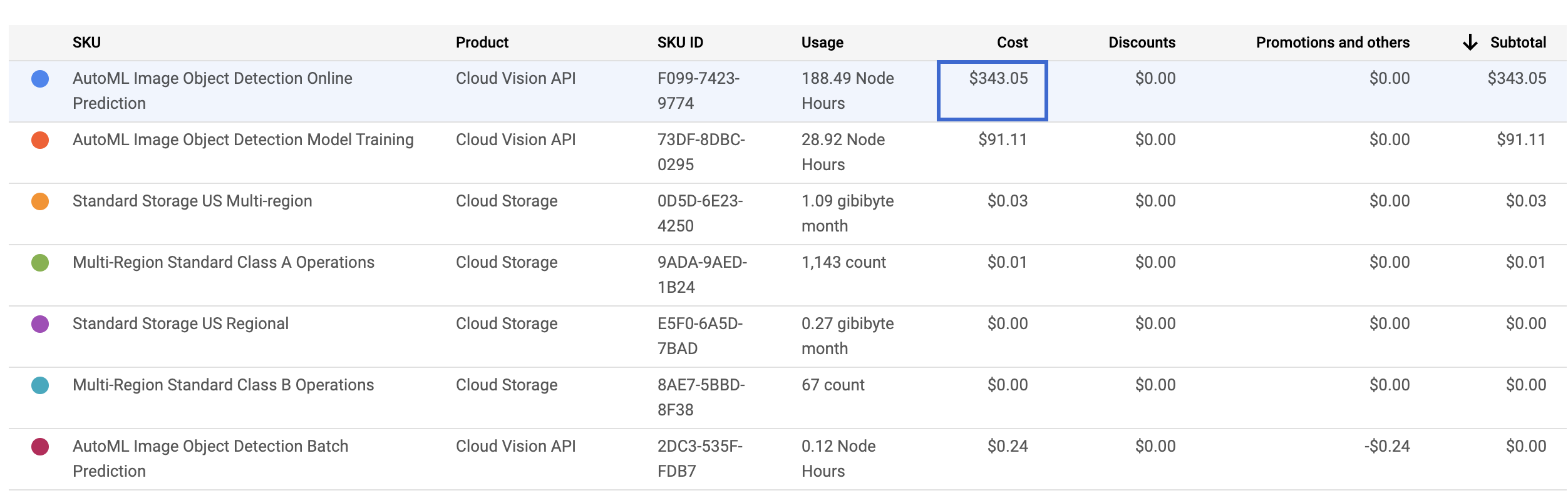

Here's what this project cost me:

The whole thing was about 450 bucks. _Ouch_.

The real cost comes from that first line item, AutoML Image Object Detection Online Prediction.
