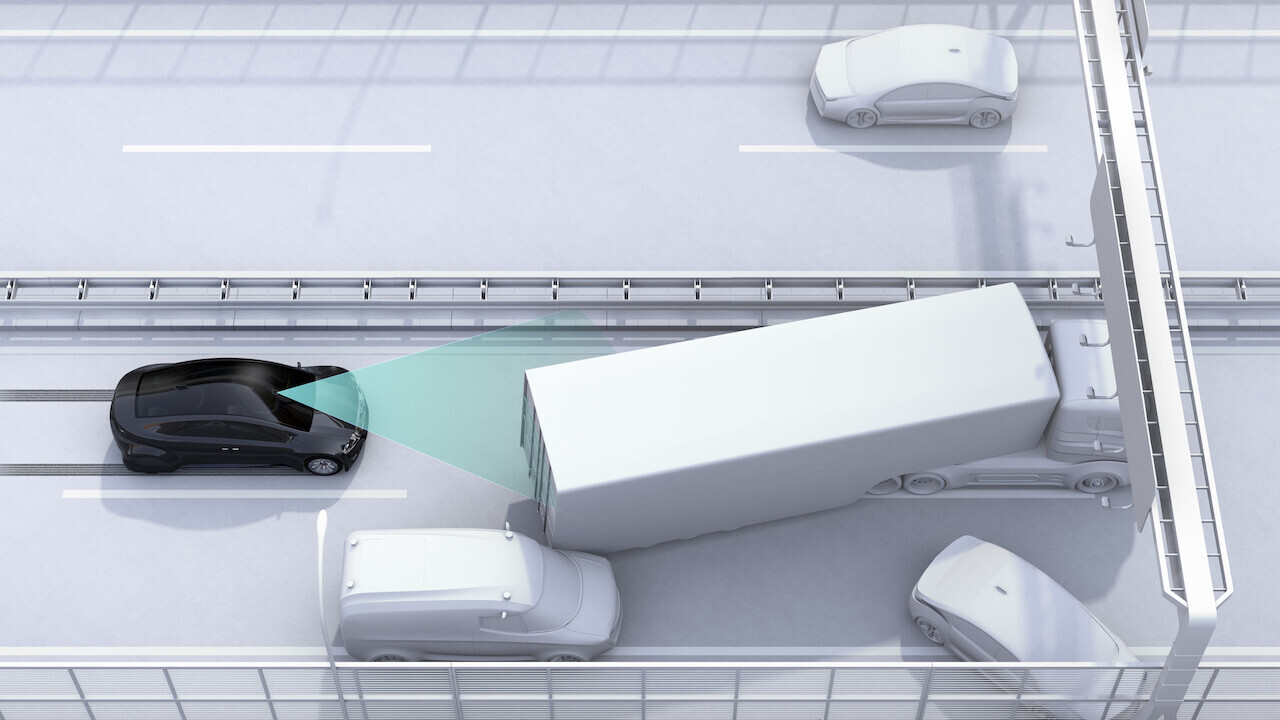

The first serious accident involving a self-driving car in Australia occurred in March this year.

The growing field of explainable AI may help answer some of the questions such accidents raise.

Who is responsible when self-driving cars crash?

While self-driving cars are new, they are still machines made and sold by manufacturers.


How much risk management is enough?

A Tesla Model 3 collides with a stationary emergency responder vehicle in the US.

The difficult question will be: how much care, and how much risk management, is enough?

In complex software, it is impossible to test for every bug in advance.

How will developers and manufacturers know when to stop?

Individuals harmed by AI systems must also be able to sue.

In cases involving self-driving cars, lawsuits against manufacturers will be particularly important.

Manufacturers often prefer not to reveal details of how their systems work, for commercial reasons.

But courts already have procedures to balance commercial interests with an appropriate amount of disclosure to facilitate litigation.

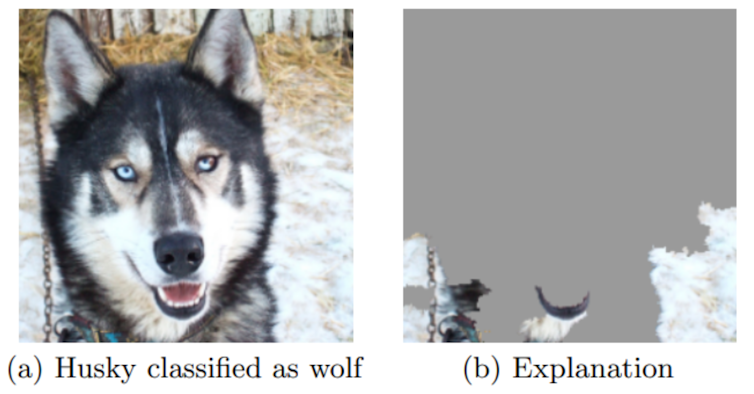

A greater challenge may arise when AI systems themselves are opaque black boxes.

Explainable AI to the rescue?

In a classic example, an AI system mistakenly classifies a picture of a husky as a wolf.

A key concern will be how much access the injured party is given to the AI system.

In the Australian Competition and Consumer Commission's case against Trivago, a critical question was how Trivago's complex ranking algorithm chose the top-ranked offer for hotel rooms.

This shows how technical experts and lawyers together can overcome AI opacity in court cases.

However, the process requires close collaboration and deep technical expertise, and will likely be expensive.