Artificial intelligence seems to be making enormous advances.

In many cases, AI can surpass the best human performance levels at specific tasks.

But is this strictly true?

Theoretical studies of computation have shown there are some things that are not computable.

This is also known as the scaling-up problem.


Fortunately, modern AI has developed alternative ways of dealing with such problems.

It does this by using methods involving approximations, probability estimates, large neural networks and other machine-learning techniques.

But these are really problems of computer science, not artificial intelligence.

Are there any fundamental limitations on AI performing intelligently?

A serious issue becomes clear when we consider human-computer interaction.

Theory of mind

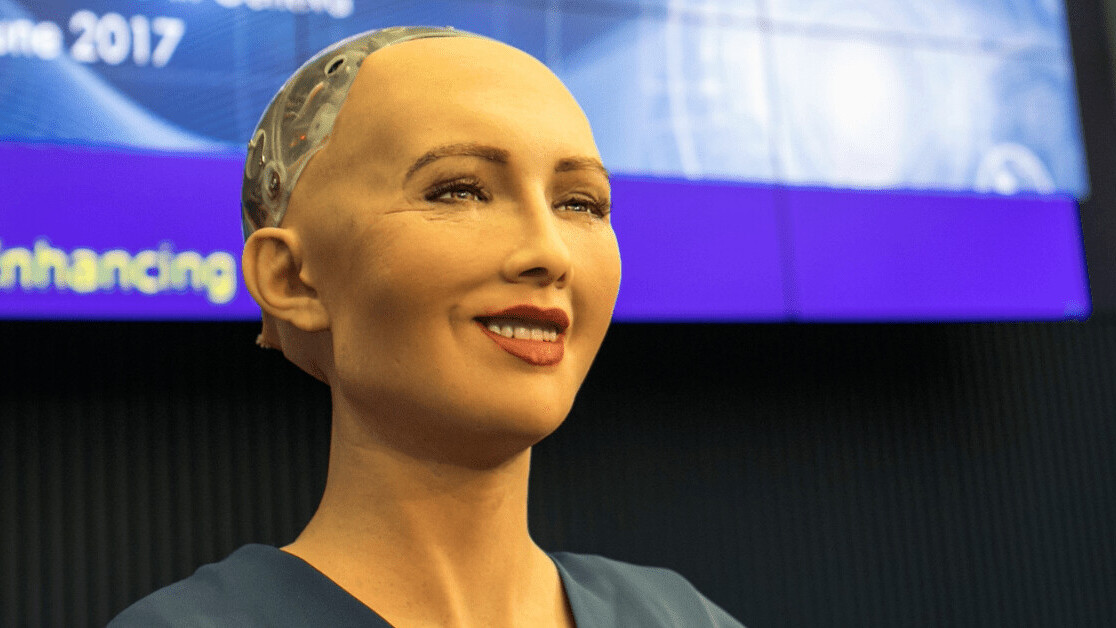

Of course, we already have primitive versions of such systems.

But audio-command systems and call-centre-style script-processing just pretend to be conversations.

AI will have to understand intentions and beliefs and the meaning of what people are saying.

We also observe the person’s actions and infer their intentions and preferences from gestures and signals.

Notice that we need to have similar experiences of life to understand this.

To understand someone else, it is necessary to know oneself.

AI needs a body to develop a sense of self.

This means social AI will need to be realized in robots with bodies.

Our conversational systems must be not just embedded but embodied.

A designer can’t effectively build a software sense-of-self for a robot.

So what we need to design is a framework that supports the learning of a subjective viewpoint.

Fortunately, there is a way out of these difficulties.

Humans face exactly the same problems, but they don’t solve them all at once.

We also learn how to act, and what the consequences of our acts and interactions are.

The first stages involve discovering the properties of passive objects and the physics of the robot’s world.

In my new book, I explore the experiments in this field.