I remain confident that we will have the basic functionality for level 5 autonomy complete this year.

I will also discuss the pathways that I think will lead to the deployment of driverless cars on roads.

Level 5 self-driving cars

This is how the U.S.

Basically, a fully autonomous car doesn't even need a steering wheel or a driver's seat.

The passengers should be able to spend their time in the car doing more productive work.

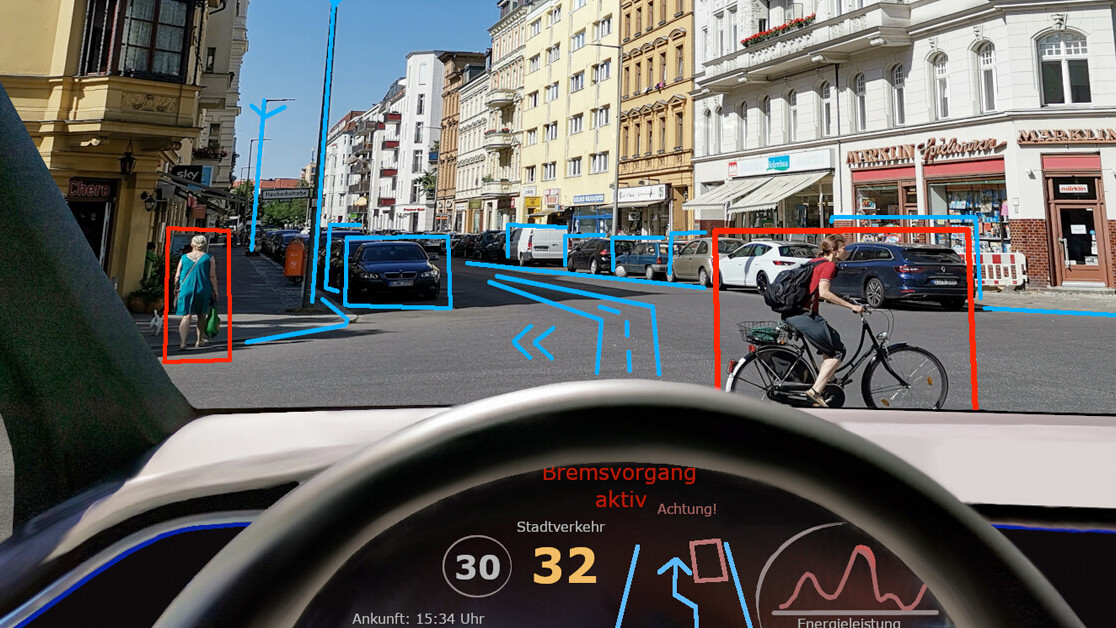

Level 5 autonomy: Full self-driving cars don't need a driver's seat.

Everyone is a passenger.

(Image credit: Depositphotos)

Current self-driving technology stands at level 2, or partial automation.

Tesla's Autopilot can perform some functions such as acceleration, steering, and braking under specific conditions.

Tesla, on the other hand, relies mainly on cameras powered by computer vision software to navigate roads and streets.

(Tesla also has a front-facing radar and ultrasonic object detectors, but those have mostly minor roles.)

We don't have 3D mapping hardware wired to our brains to detect objects and avoid collisions.

But here's where things fall apart.

This is something Musk tacitly acknowledged in his remarks.

This is where most of the training data for Tesla's computer vision algorithms come from.

We understand causality and can determine which events cause others.

And what if you meet a stray elephant in the street for the first time?

Do you need previous training examples to know that you should probably make a detour?

In another incident, a Tesla self-drove into a concrete barrier, killing the driver.

And there have been several incidents of Tesla vehicles on Autopilot crashing into parked fire trucks and overturned vehicles.

But the problem is, we don't know how many of these edge cases exist.

I think the key here is the fact that Musk believes there are no fundamental challenges.

He also said that it's not a problem that can be simulated in virtual environments.

"You need a kind of real world situation."

"Nothing is more complex and weird than the real world," Musk said.

"Any simulation we create is necessarily a subset of the complexity of the real world."

But will more data solve the problem?

Interpolation vs extrapolation

The AI community is divided on how to solve the long tail problem.

Another argument that supports the big data approach is the direct-fit perspective.

But it must still figure out how to use its vast store of data efficiently.

Extrapolation (left) tries to extract rules from big data and apply them to the entire problem space.

Interpolation (right) relies on rich sampling of the problem space to calculate the spaces between samples.
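The contrast between filling in the spaces between samples and predicting beyond them can be illustrated with a toy example. The sketch below is only an illustration under stated assumptions: the target function (`sin`), the sample count, and the helper names `make_interpolator` and `max_error` are all made up for this purpose, and clamping to the nearest sample outside the sampled range is just one simple behavior a direct-fit predictor might have. It says nothing about how any real driving system is built.

```python
import math
from bisect import bisect_left

def make_interpolator(xs, ys):
    """Return a piecewise-linear predictor built only from the samples (xs, ys).

    Queries outside the sampled range are clamped to the nearest sample.
    This is an assumed, deliberately naive behavior for illustration.
    """
    def predict(x):
        if x <= xs[0]:
            return ys[0]
        if x >= xs[-1]:
            return ys[-1]
        i = bisect_left(xs, x)  # xs[i - 1] < x <= xs[i]
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        t = (x - x0) / (x1 - x0)
        return y0 + t * (y1 - y0)
    return predict

# "Training data": 50 evenly spaced samples of sin(x) on [0, 2*pi].
n = 50
xs = [2 * math.pi * k / (n - 1) for k in range(n)]
ys = [math.sin(x) for x in xs]
predict = make_interpolator(xs, ys)

def max_error(lo, hi, steps=200):
    """Largest absolute error of predict() against sin(x) on [lo, hi]."""
    points = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return max(abs(predict(x) - math.sin(x)) for x in points)

inside_error = max_error(0.0, 2 * math.pi)           # between the samples
outside_error = max_error(2 * math.pi, 3 * math.pi)  # beyond the samples

print(f"max error inside the sampled range:  {inside_error:.4f}")
print(f"max error outside the sampled range: {outside_error:.4f}")
```

With dense sampling, the error between samples stays small, while the error beyond the sampled range grows large because the predictor has no rule to fall back on there.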

On the opposite side are those who believe that deep learning is fundamentally flawed because it can only interpolate.

Deep neural networks extract patterns from data, but they don't develop causal models of their environment.

I personally stand with the latter view.

There are many efforts to improve deep learning systems.

Another notable area of research is system 2 deep learning.

So I suppose they will be ruled out for Musk's end-of-2020 timeframe.

Humans get tired, distracted, reckless, drunk, and they cause more accidents than self-driving cars.

The first part, about human error, is true.

But I'm not so sure whether comparing accident frequency between human drivers and AI is correct.

I believe the sample size and data distribution do not paint an accurate picture yet.
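To see why sample size matters when comparing accident rates, consider a rough sketch. Every number below is hypothetical and invented purely for illustration; none comes from Tesla or from any traffic agency. The helper `rate_interval` is also an assumption: it treats the accident count as Poisson and uses a normal approximation, which is only a crude guide when counts are small.

```python
import math

def rate_interval(events, million_miles, z=1.96):
    """Approximate 95% interval for events per million miles.

    Assumes a Poisson count and a normal approximation, so it is
    only a rough guide when the number of events is small.
    """
    rate = events / million_miles
    half_width = z * math.sqrt(events) / million_miles
    return max(0.0, rate - half_width), rate + half_width

# Hypothetical figures: both fleets have the same observed rate,
# but very different amounts of driving behind that rate.
small_fleet = rate_interval(events=4, million_miles=10)
large_fleet = rate_interval(events=4000, million_miles=10000)

print("small sample interval:", small_fleet)
print("large sample interval:", large_fleet)
```

Both fleets show the same observed rate, but the interval around the small sample is far wider, so a headline comparison between the two rates would say little. This sketch covers only sample size; it does not address differences in where and when the miles were driven.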

But more importantly, I think comparing numbers is misleading at this point.

What is more important is the fundamental difference between how humans and AI perceive the world.

Think about the color and shape of stop signs, lane dividers, flashers, etc.

We have made all these choices, consciously or not, based on the general preferences and sensibilities of the human vision system.

So is it enough to be twice as safe as humans?

I do not think regulators will accept equivalent safety to humans.

One such pathway is to change roads and infrastructure to accommodate the hardware and software present in cars.

This will allow all these objects to identify each other and communicate through radio signals.

And we're still exploring the privacy and security threats of putting an internet-connected chip in everything.

An intermediate scenario is the geofenced approach.
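A geofenced deployment restricts driverless operation to a predefined area. As a minimal sketch of the basic check such a restriction implies, the example below uses the standard ray-casting point-in-polygon test. The function name `inside_geofence`, the rectangular `zone`, and the flat planar coordinates are all hypothetical; a real system would work with geographic coordinates and far more detailed maps.

```python
def inside_geofence(point, polygon):
    """Ray-casting test: True if point (x, y) lies inside the polygon.

    polygon is a list of (x, y) vertices in order; the last vertex
    connects back to the first.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's horizontal line can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            # Count crossings to the right of the point.
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical service area in arbitrary planar units.
zone = [(0, 0), (4, 0), (4, 3), (0, 3)]

print(inside_geofence((2, 1), zone))  # a point within the zone
print(inside_geofence((5, 1), zone))  # a point outside the zone
```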

Some experts describe these approaches as moving the goalposts or redefining the problem, which is partly correct.

There are also legal hurdles.

We have clear rules and regulations that determine who is responsible when human-driven cars cause accidents.

But self-driving cars are still in a gray area.

For now, drivers are responsible for their Tesla's actions, even when it is in Autopilot mode.

But in a level 5 autonomous vehicle, there's no driver to blame for accidents.

Musk also said Tesla will have the "basic" functionality for Level 5 autonomy completed this year.

It's not clear if "basic" means complete and ready to deploy.

Musk is a genius and an accomplished entrepreneur.

But the self-driving car problem is much bigger than one person or one company.

It stands at the intersection of many scientific, regulatory, social, and philosophical domains.
