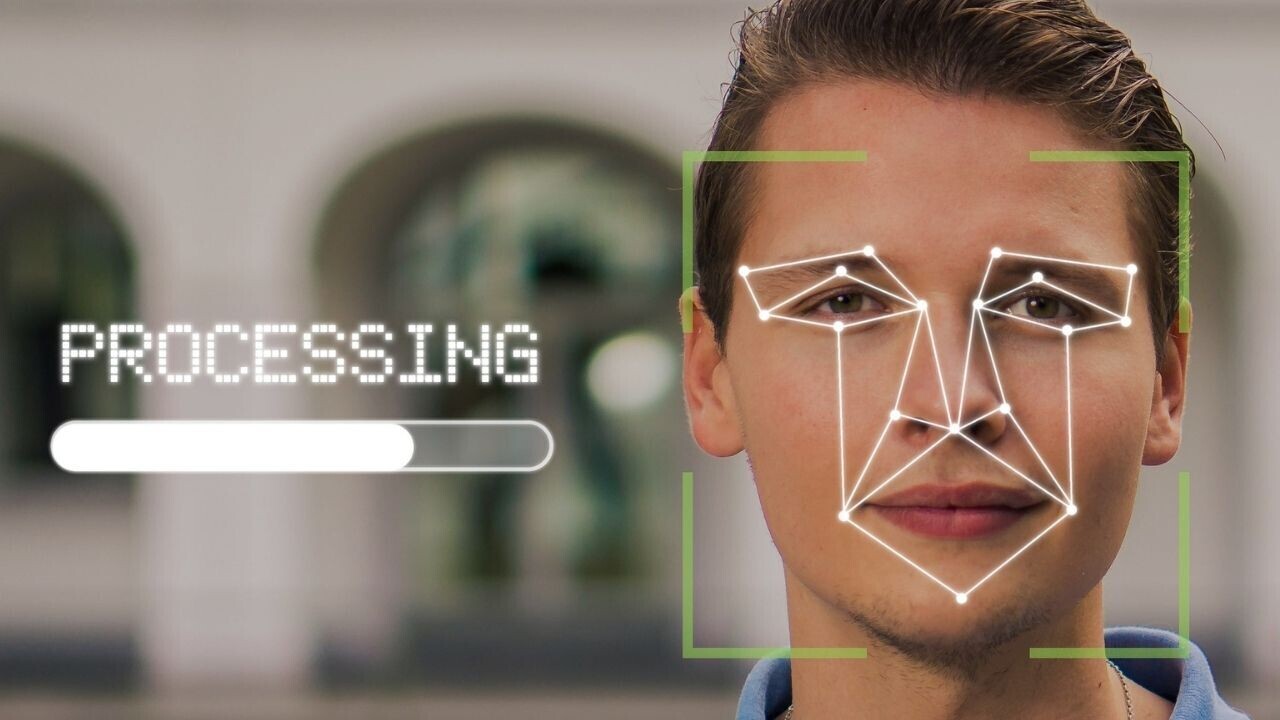

The growing use of emotion recognition AI is causing alarm among ethicists.

Some argue that AI isn't even capable of accurately detecting emotions.

A new study published in Nature Communications has shed further light on these shortcomings.

The researchers analyzed photos of actors to examine whether facial movements reliably express emotional states.

They found that people use different facial movements to communicate similar emotions.


It's important not to confuse the description of a facial configuration with inferences about its emotional meaning.

The actors were photographed performing detailed, emotion-evoking scenarios.

The scenarios were evaluated in two separate studies.

Next, the researchers used the median rating of each scenario to classify them into 13 categories of emotion.

The team then used machine learning to analyze how the actors portrayed these emotions in the photos.

This revealed that the actors used different facial gestures to portray the same categories of emotions.

It also showed that similar facial poses didn't reliably express the same emotional category.

The study illustrates the enormous variability in how we express our emotions.

Story by Thomas Macaulay

Thomas is the managing editor of TNW.

He leads our coverage of European tech and oversees our talented team of writers.


Away from work, he enjoys playing chess (badly) and the guitar (even worse).